Squash began as a crazy dream.

Soon after I started PolitiFact in 2007, readers began suggesting a cool but far-fetched idea. They wanted to see our fact checks pop up on live TV.

That kind of automated fact-checking wasn’t possible with the technology available back then, but I liked the idea so much that I hacked together a PowerPoint of how it might look. It showed a guy watching a campaign ad when PolitiFact’s Truth-O-Meter suddenly popped up to indicate the ad was false.

It took 12 years, but our team in the Duke University Reporters’ Lab managed to make the dream come true. Today, Squash (our code name for the project, chosen because it is a nutritious vegetable and a good metaphor for stopping falsehoods) has been a remarkable success. It displays fact checks seconds after politicians utter a claim and it largely does what those readers wanted in 2007.

But Squash also makes lots of mistakes. It converts politicians’ speech to the wrong text (often with funny results) and it frequently stays idle because there simply aren’t enough claims that have been checked by the nation’s fact-checking organizations. It isn’t quite ready for prime time.

As we wrap up four years on the project, I wanted to share some of our lessons to help developers and journalists who want to continue our work. There is great potential in automated fact-checking and I’m hopeful that others will build on our success.

When I first came to Duke in 2013 and began exploring the idea, it went nowhere. That’s partly because the technology wasn’t ready and partly because I was focused on the old way that campaign ads were delivered — through conventional TV. That made it difficult to isolate ads the way we needed to.

But the technology changed. Political speeches and ads migrated to the web and my Duke team partnered with Google, Jigsaw and Schema.org to create ClaimReview, a tagging system for fact-check articles. Suddenly we had the key elements that made instant fact-checking possible: accessible video and a big database of fact checks.

I wasn’t smart enough to realize that, but my colleague Mark Stencel, the co-director of the Reporters’ Lab, was. He came into my office one day and said ClaimReview was a game changer. “You realize what you’ve done, right? You’ve created the magic ingredient for your dream of live fact-checking.” Um … yes! That had been my master plan all along!

Fact-checkers use the ClaimReview tagging system to indicate the person and claim being checked, which not only helps Google highlight the articles in search results, it also makes a big database of checks that Squash can tap.
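
For developers who have not seen ClaimReview markup, here is a rough sketch of what one record might look like and how an app could read it. The field names come from the Schema.org ClaimReview type. The values, the URL and the `summarize` helper are invented for illustration and are not taken from Squash.

```python
import json

# Illustrative ClaimReview markup. Every value here is made up for this sketch.
claim_review_json = """
{
  "@context": "https://schema.org",
  "@type": "ClaimReview",
  "url": "https://example.org/fact-checks/sample",
  "datePublished": "2020-01-15",
  "claimReviewed": "Unemployment is at its lowest level in 50 years.",
  "author": {"@type": "Organization", "name": "Example Fact Checker"},
  "itemReviewed": {
    "@type": "Claim",
    "author": {"@type": "Person", "name": "Jane Politician"}
  },
  "reviewRating": {
    "@type": "Rating",
    "alternateName": "Half True"
  }
}
"""


def summarize(markup: str) -> dict:
    """Pull out the fields a matching app would need from one record (hypothetical helper)."""
    record = json.loads(markup)
    return {
        "speaker": record["itemReviewed"]["author"]["name"],
        "claim": record["claimReviewed"],
        "rating": record["reviewRating"]["alternateName"],
        "checked_by": record["author"]["name"],
        "url": record["url"],
    }


summary = summarize(claim_review_json)
print(summary["speaker"], "|", summary["claim"], "|", summary["rating"])
```

Because every fact-checker that uses the tags describes the speaker, claim and rating in the same structure, an app can treat articles from many publishers as one searchable collection.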

It would be difficult to overstate the technical challenge we were facing. No one had attempted this kind of work beyond doing a demo, so there was no template to follow. Fortunately we had a smart technical team and some generous support from the Knight Foundation, Craig Newmark and Facebook.

Christopher Guess, our wicked-smart lead technologist, had to invent new ways to do just about everything, combining open-source tools with software that he built himself. He designed a system to ingest live TV and process the audio for instant fact-checking. It worked so fast that we had to slow down the video.

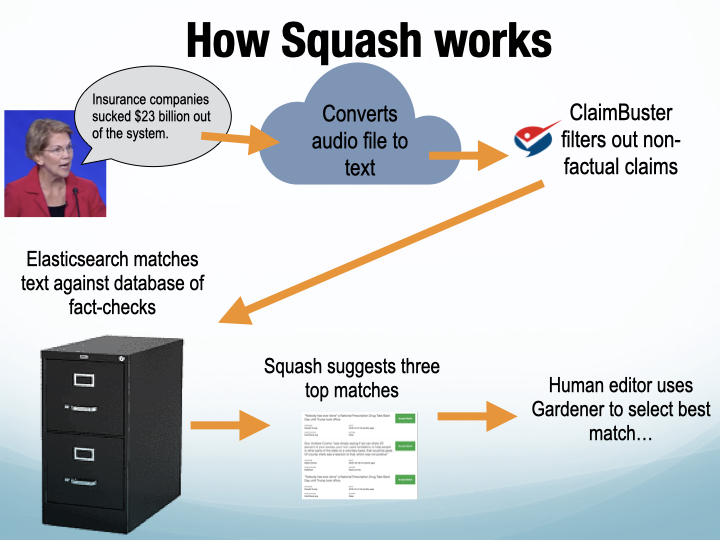

To reduce the massive amount of computer processing, a team of students led by Duke computer science professor Jun Yang came up with a creative way to filter out sentences that did not contain factual claims. They used ClaimBuster, an algorithm developed at the University of Texas at Arlington, to act like a colander that kept only good factual claims and let the others drain away.

Today, this is how Squash works: It “listens” to a speech or debate and sends audio clips to Google Cloud, which converts them to text. That text is then run through ClaimBuster, which identifies sentences the algorithm believes are good claims to check. Those sentences are compared against the database of published fact checks to look for matches. When one is found, a summary of that fact check pops up on the screen.
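
The steps above can be sketched in a few dozen lines. This is a toy outline, not Squash's code: `transcribe` stands in for the speech-to-text service, `claim_score` is a crude substitute for a claim-detection model such as ClaimBuster, and the word-overlap matcher, the thresholds and the sample data are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass
class FactCheck:
    claim: str
    rating: str
    source: str


def transcribe(audio_clip: str) -> str:
    """Stand-in for speech-to-text. In this sketch the 'clip' is already text."""
    return audio_clip


def claim_score(sentence: str) -> float:
    """Toy stand-in for a claim-detection model: a number hints at a checkable claim."""
    return 0.9 if re.search(r"\d", sentence) else 0.1


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_match(sentence: str, database: List[FactCheck],
               min_overlap: float = 0.5) -> Optional[FactCheck]:
    """Return the fact check with the highest Jaccard word overlap, if it clears the bar."""
    words = tokens(sentence)
    best, best_score = None, 0.0
    for check in database:
        other = tokens(check.claim)
        union = words | other
        score = len(words & other) / len(union) if union else 0.0
        if score > best_score:
            best, best_score = check, score
    return best if best_score >= min_overlap else None


def run_pipeline(clips: List[str], database: List[FactCheck],
                 claim_threshold: float = 0.5) -> List[Tuple[str, FactCheck]]:
    popups = []
    for clip in clips:
        sentence = transcribe(clip)
        if claim_score(sentence) < claim_threshold:
            continue  # not a factual claim, so skip the expensive matching step
        match = best_match(sentence, database)
        if match is not None:
            popups.append((sentence, match))  # this is what would pop up on screen
    return popups


# Invented sample data.
database = [FactCheck("We created 5 million jobs in 3 years", "Half True", "Example Fact Checker")]
clips = [
    "Thank you all for being here tonight.",
    "We created 5 million jobs in just 3 years.",
    "Crime fell 40 percent in my state.",
]
popups = run_pipeline(clips, database)
for sentence, check in popups:
    print(f"{sentence!r} -> Related fact check: {check.rating} ({check.source})")
```

In this toy run the greeting is filtered out as a non-claim, the jobs sentence finds a related fact check, and the crime sentence finds nothing because the database has no similar claim.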

The first few times you see the related fact check appear on the screen, it’s amazing. I got chills. I felt I was getting a glimpse of the future. The dream of those PolitiFact readers from 2007 had come true.

But …

Look a little closer and you will quickly realize that Squash isn’t perfect. If you watch in our web mode, which shows Squash’s AI “brain” at work, you will see plenty of mistakes as it converts voice to text. Some are real doozies.

Last summer during the Democratic convention, former Iowa Gov. Tom Vilsack said this: “The powerful storm that swept through Iowa last week has taken a terrible toll on our farmers …”

But Squash (it was really Google Cloud) translated it as “Armpit sweat through the last week is taking a terrible toll on our farmers.”

Squash’s matching algorithm also makes too many mistakes finding the right fact check. Sometimes it is right on the money. It often correctly matched then-President Donald Trump’s statements on China, the economy and the border wall.

But other times it comes up with bizarre matches. Guess and our project manager Erica Ryan, who spends hours analyzing the results of our tests, believe this often happens because Squash mistakenly thinks an individual word or number is important. (Our all-time favorite was in our first test, when it matched a sentence by President Trump about men walking on the moon with a Washington Post fact check about the bureaucracy for getting a road permit. The match occurred because both included the word “years.”)

To reduce the problem, Guess built a human editing tool called Gardener that enables us to weed out the bad matches. That helps a lot because the editor can choose the best fact check or reject them all.

The most frustrating problem is that a lot of the time, Squash just sits there, idle, even when politicians are spewing sentences packed with factual claims. Squash is working properly, Guess assures us; it just isn’t finding any fact checks that are even close. This happened in our latest test, a news conference by President Joe Biden, when Squash could muster only two matches in more than an hour.

The cause is simple: There are not enough published fact checks to power Squash (or any other automated app).

We need more fact checks – As I noted in the previous section, this is a major shortcoming that will hinder anyone who wants to draw from the existing corpus of fact checks. Despite the steady growth of fact-checking in the United States and around the world, and despite the boom that occurred in the Trump years, there simply are not enough fact checks of enough politicians to provide enough matches for Squash and similar apps.

We had our greatest success during debates and party conventions, events when Squash could draw from a relatively large database of checks on the candidates from PolitiFact, FactCheck.org and The Washington Post. But we could not use Squash on state and local events because there simply were not enough fact checks for possible matches.

Ryan and Guess believe we need dozens of fact checks on a single candidate, across a broad range of topics, to have enough to make Squash work.

More armpit sweat is needed to improve voice to text – We all know the limitations of Siri, which still translates a lot of things wrong despite years of tweaks and improvements by Apple. That’s a reminder that improving voice-to-text technology remains a difficult challenge. It’s especially hard in political events when audio can be inconsistent and when candidates sometimes shout at each other. (Identifying speakers in debates is yet another problem.)

As we currently envision Squash and this type of automated fact-checking, we are reliant on voice-to-text translations, but given the difficulty of automated “hearing,” we’ll have to accept a certain error level for the foreseeable future.

Matching algorithms can be improved – This is one area that we’re optimistic about. Most of our tests relied on off-the-shelf search engines to do the matching, until Guess began to experiment with a new approach. It relies on subject tags (which unfortunately are not included in ClaimReview) to help the algorithm make smarter choices and avoid irrelevant ones.

The idea is that if Squash knows the claim is about guns, it would find the best matches from published fact checks that have been tagged under the same subject. Guess found this approach promising but did not get a chance to try it at scale.
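
Here is a small sketch of why subject tags could help. It is not Guess's implementation: the keyword-based `guess_subject` function, the simple shared-word count and the two sample fact checks are all invented to show the idea. Without tags, a sentence about guns can land on a road-permit fact check just because they share common words such as “years.” With tags, only fact checks on the same subject are considered.

```python
import re

# Invented fact checks, each carrying a hypothetical subject tag.
FACT_CHECKS = [
    {"claim": "It takes 10 years to get a permit to build a road", "subject": "infrastructure"},
    {"claim": "Background checks blocked 3 million gun sales", "subject": "guns"},
]

# Hypothetical keyword lists used to guess the subject of a spoken sentence.
SUBJECT_KEYWORDS = {
    "guns": {"gun", "guns", "firearm", "firearms"},
    "infrastructure": {"road", "roads", "bridge", "permit"},
}


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def guess_subject(sentence):
    """Return the first subject whose keywords appear in the sentence, or None."""
    words = tokens(sentence)
    for subject, keywords in SUBJECT_KEYWORDS.items():
        if words & keywords:
            return subject
    return None


def overlap(a, b):
    """Count the words two texts share. Deliberately naive."""
    return len(tokens(a) & tokens(b))


def match(sentence, use_subject):
    candidates = FACT_CHECKS
    if use_subject:
        subject = guess_subject(sentence)
        candidates = [c for c in FACT_CHECKS if c["subject"] == subject]
    scored = [(overlap(sentence, c["claim"]), c) for c in candidates]
    scored = [pair for pair in scored if pair[0] > 0]
    return max(scored, key=lambda pair: pair[0])[1] if scored else None


sentence = "For 10 years we have failed to pass a gun law"
naive = match(sentence, use_subject=False)
tagged = match(sentence, use_subject=True)
print("Without subject tags:", naive["claim"])
print("With subject tags:   ", tagged["claim"])
```

The trade-off in a sketch like this is that a wrong subject guess hides every good match, so the subject step has to be reliable before it can replace human review.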

Until the matching improves, we’ve found humans are still needed to monitor and manage anything that gets displayed — as we did with our Gardener tool.

Ugh, UX – The simplest part of my vision, the Truth-O-Meter popping up on the screen, ended up being one of our most complex challenges. Yes, Guess was able to make the meter or the Washington Post Pinocchios pop up, but what were they referring to? This question of user experience was tricky in several ways.

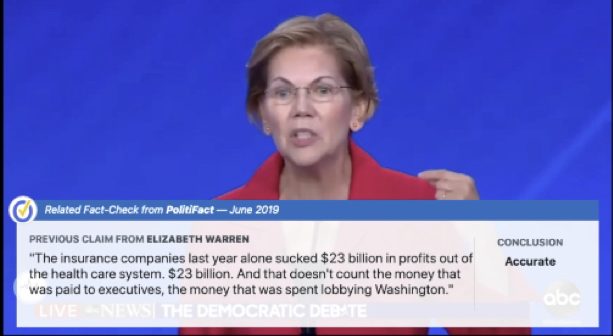

First, we were not providing an instant fact check of the statement that was just said. We were popping up a summary of a related fact check that was previously published. Because politicians repeat the same talking points, the statements were generally similar and in some cases, even identical. But we couldn’t guarantee that, so we labeled the pop-up “Related fact-check.”

Second, the fact check appeared during a live, fast-moving event. So we realized it could be unclear to viewers which previous statement the pop-up referred to. This was especially tricky in a debate when candidates traded competing factual claims. The pop-up could be helpful with either of them. But the visual design that seemed so simple for my PowerPoint a decade earlier didn’t work in real life. Was that “False” Truth-O-Meter for the immigration statement Biden said? Or the one that Trump said?

Another UX problem: To give people time to read all the text (the related fact checks sometimes had lengthy statements), Guess had them linger on the screen for 15 seconds. And our designer Justin Reese made them attractive and readable. But by the end of that time the candidates might have made two more factual claims, further confusing viewers who saw the “False” meter.

So UX wasn’t just a problem, it was a tangle of many problems involving limited space on the screen (What should we display and where? Will readers understand the concept that the previous fact check is only related to what was just said?), time (How long should we display it in relation to when the politician spoke?) and user interaction (Should our web version allow users to pause the speech or debate to read a related fact check?). It’s an enormously complicated challenge.

* * *

Looking back at my PowerPoint vision of how automated fact-checking would work, we came pretty close. We succeeded in using technology to detect political speech and make relevant fact checks automatically pop up on a video screen. That’s a remarkable achievement, a testament to groundbreaking work by Guess and an incredible team.

But there are plenty of barriers that make it difficult for us to realize the dream and will challenge anyone who tries to tackle this in the future. I hope others can build on our successes, learn from our mistakes, and develop better versions in years to come.
