Fact deserts leave states vulnerable to election lies

Politicians in 29 states get little scrutiny for what they say, while local fact-checkers in other places struggle to keep pace with campaign misinformation.

By Mark Stencel, Erica Ryan and Belen Bricchi - November 16, 2022

Amid the political lies and misinformation that spread across the country throughout the 2022 midterm elections, statements by candidates in 29 states rarely faced the scrutiny of independent fact-checkers.

Why? Because there weren’t any local fact-checkers.

Even in the places where diligent local media outlets regularly worked to verify the accuracy of political claims, the volume of questionable statements in debates, speeches, campaign ads and social posts far outpaced the fact-checkers' ability to set the record straight.

An initial survey by the Reporters' Lab at Duke University identified 46 locally focused fact-checking projects during this year's campaign in 21 states and the District of Columbia. That count has changed little across the national election years since 2016: those years averaged 47 active fact-checking projects at the state and local level.

Active State/Local Fact-Checkers in the U.S., 2003-22

There’s also been lots of turnover among local fact-checking projects over time. At least 40 projects have come and gone since 2010. And fact-checking is not always front and center, even among the news outlets that devote considerable effort and time to this reporting.

While some fact-checkers consistently produce reports from election to election, many others are campaign-season one-offs. And the overwhelming emphasis on campaign claims can produce a fact-vacuum after the votes are counted — when elected officials, party operatives and others in the political process continue to make erroneous and misleading statements.

Fact-checks also can be hard for readers and viewers to find — sometimes appearing only in a broader scroll of state political news, with little effort to make this vital reporting stand out or to showcase it on a separate page.

The Duke Reporters’ Lab conducted this initial survey to assess the state of local fact-checking during the 2022 midterm elections. The Lab first began tracking fact-checking projects across the United States and around the world in 2014 and maintains a global database and map of fact-checking projects.

While 29 states currently appear to have no fact-checking projects that regularly report on claims from politicians or social media at the local level, residents may encounter occasional one-off fact-checks from their state’s media outlets.

Among the states lacking dedicated fact-checking projects are four that had hotly contested Senate or governor races this fall — New Hampshire, Kansas, Ohio and Oregon.

The states with the most robust fact-checking in terms of projects based there include Texas with five outlets; Iowa and North Carolina with four; and Florida, Michigan and Wisconsin with three each.

Competition seems to generate additional fact-checking. States with active fact-checking projects tend to have at least two (14 states of the 21), while seven states and D.C. have a single locally focused project.

Local television stations are the most active fact-check producers. Of the outlets that generated fact-checks at the state and local level this year, more than half are local television stations. That marks a change from much of the past two decades, when newspapers and their websites were the primary outlets for local fact-checks.

Who Produces Local Fact-Checks?

Almost all local fact-checking projects are run by media outlets, while several are based at universities or nonprofit organizations. The university-related fact-checkers are Arizona State University’s Walter Cronkite School of Journalism and Mass Communication; The Daily Iowan, the University of Iowa’s independent student newspaper; and West Virginia University’s Reed College of Media. All three are state affiliates of PolitiFact, the prolific national fact-checking organization based at the nonprofit Poynter Institute in St. Petersburg, Florida.

National news partnerships and media owners drive a significant amount of local fact-checking. Of the 46 projects, about a quarter are affiliated with PolitiFact, while another half-dozen are among the most active local stations participating in the Verify fact-checking project by TV company Tegna. In addition, five Graham Media Group television stations use a unified Trust Index brand at the local level.

One of the newest efforts to encourage local fact-checking is Repustar's Gigafact, a nonprofit project that partnered with three newsrooms to counter misinformation during the midterms. The Arizona Center for Investigative Reporting, The Nevada Independent and Wisconsin Watch produced "fact briefs," which are short, timely reports that answer yes-or-no questions, such as "Is Nevada's violent crime rate higher than the national average?"

Nearly 40 percent of the fact-checkers in the Lab's count launched in 2020 or later, including 11 projects in 2020 alone. In contrast, the oldest fact-checker, WISC-TV in Madison, Wisconsin, began producing its Reality Check segments almost two decades ago, in 2004. It's among 12 fact-checkers that have been active for 10 years or more.

Another new initiative, launched in April with the help of two prominent international fact-checking organizations, aims to increase Spanish-language fact-checking at the local level in the U.S.

Factchequeado, a partnership between Maldita.es of Spain and Chequeado in Argentina, has built a network of 27 allies, including 19 local news outlets in the U.S., through which it shares fact-checks and media literacy content. Currently, most Factchequeado fact-checks are produced at the national level by its own staff. Through its U.S. partnerships, Factchequeado aims to train Hispanic journalists to produce original fact-checks in Spanish at the local level.

The Reporters’ Lab conducted this survey with support from the John S. and James L. Knight Foundation, which also has helped fund the Lab’s work on automated fact-checking. The Lab intends to follow up its initial assessment of the local fact-checking landscape with a post-election report that will dive into some of the challenges facing journalists trying to do this vital work. Our follow-up report will explore the content of local fact-checkers’ work in 2022, including data on whom they fact-checked and their approaches to rating claims. We will interview local reporters, producers and editors about public and political feedback and their editorial processes and methodologies. We also intend to examine why some local fact-checking initiatives are short-lived election-year efforts while others have carried on consistently for many years.

Here’s how we decide which fact-checkers to include in the Reporters’ Lab database. The Lab continually collects new information about the fact-checkers it identifies, such as when they launched and how long they last. If you know of a fact-checking project that has been missed, please contact Mark Stencel and Erica Ryan at the Reporters’ Lab.

Joel Luther of the Reporters’ Lab contributed to this report.

Appendix: Local Fact-Checking Projects

Arizona

Arizona Center for Investigative Reporting (Gigafact) | Phoenix

Fact-checking for Repustar's Gigafact Project by an independent, nonprofit newsroom in Phoenix funded by individual donors, foundations, fee-for-service revenue and other sources. Repustar is a privately funded benefit corporation based in the San Francisco Bay Area.

PolitiFact Arizona | Phoenix

The Walter Cronkite School of Journalism and Mass Communication at Arizona State University is PolitiFact's local affiliate in Arizona. PolitiFact previously worked in Arizona with KNXV-TV (ABC15), ABC's local affiliate in Phoenix, as part of a partnership with the station's owner, Scripps TV Station Group. (KNXV-TV had previously produced its own "Truth Test" segments.) PolitiFact's national staff maintained the site starting with the 2018 midterm election cycle until the fact-checking organization partnered with ASU in 2022.

California

PolitiFact California | Sacramento

Affiliate of PolitiFact, staffed by reporters at Capital Public Radio.

Sacramento Bee Fact Check | Sacramento

Fact-checks by Sacramento Bee reporters appear in its Capitol Alert section, especially in election years. Began as an “Ad Watch” feature focused on political advertising.

Colorado

9News Truth Test | Denver

NBC’s local TV affiliate in Denver has long done political fact-checking, particularly during elections. In addition, the Tegna-owned station also actively contributes to the Verify initiative — a companywide fact-checking and explanatory journalism project that involves a mix of local stories and national reporting shared across more than 60 stations (https://www.9news.com/verify). 9News relies on funding from advertising and local carriage fees from cable, satellite and digital TV service providers.

CBS4 Reality Check | Denver

Election-year fact-checks from Denver's local, CBS-owned commercial TV station.

District of Columbia

WUSA9 Verify | Washington

WUSA9 is among the most active contributors in Tegna's Verify initiative — a companywide fact-checking and explanatory journalism project that involves a mix of local stories and national reporting shared across more than 60 stations. The Washington area's CBS affiliate relies on funding from advertising and local carriage fees from cable, satellite and digital TV service providers.

Florida

News4Jax Trust Index | Jacksonville

Fact-checking by the news team at WJXT-TV (News4Jax), an independent commercial TV station in Jacksonville, Florida. News4Jax is owned by the Graham Media Group, a commercial media company whose stations launched their “Trust Index” reporting during the 2020 U.S. elections with help and training from Fathm, a media lab and international consulting group.

News 6 Trust Index | Orlando

Fact-checking by the news team at WKMG-TV (News 6), the CBS affiliate in Orlando, Florida. News 6 is owned by the Graham Media Group, a commercial media company whose regional TV stations launched their “Trust Index” reporting during the 2020 U.S. elections with help and training from Fathm, a media lab and international consulting group.

PolitiFact Florida | St. Petersburg

PolitiFact’s reporting on the state is produced in affiliation with the Tampa Bay Times. The newspaper’s bureau in Washington, D.C., was the fact-checking service’s original home before it was folded into the Poynter Institute — a non-profit media training center in St. Petersburg, Florida, that also owns the Times and its commercial publishing company. From 2010 to 2017, the Miami Herald was also a PolitiFact Florida reporting and distribution partner.

Georgia

11 Alive Verify | Atlanta

WXIA is among the most active contributors in Tegna's Verify initiative — a companywide fact-checking and explanatory journalism project that involves a mix of local stories and national reporting shared across more than 60 stations. The Atlanta area's NBC affiliate relies on funding from advertising and local carriage fees from cable, satellite and digital TV service providers.

Illinois

PolitiFact Illinois | Chicago

Affiliate of PolitiFact, staffed by reporters and researchers from the Better Government Association, a nonprofit watchdog organization founded in 1923 that focuses on investigative journalism. PolitiFact’s previous news partner in the state was Reboot Illinois, a for-profit digital news service.

Iowa

Gazette Fact Checker | Cedar Rapids

Fact-checks by reporters at The Cedar Rapids Gazette. The newspaper previously worked on its fact-checks in collaboration with KCRG-TV, a local TV station the Gazette owned until 2015.

KCCI’s Get the Facts | Des Moines

Fact-checks of campaign ads during election cycles by reporters at the Des Moines, Iowa, CBS affiliate, a commercial station owned by Hearst Television.

KCRG-TV’s “I9 Fact Checker” | Cedar Rapids

Occasional fact-checks presented by commercial station KCRG-TV’s “I9 Investigation” team. The local ABC affiliate in Cedar Rapids was previously owned by the area’s local newspaper, The Cedar Rapids Gazette. The two news organizations worked together on fact-checks from 2014 to 2018.

PolitiFact Iowa | Iowa City

Affiliate of PolitiFact, staffed by reporters at The Daily Iowan, the independent student newspaper at the University of Iowa. PolitiFact’s previous state partner in Iowa was the Des Moines Register.

Maine

Bangor Daily News Ad Watch | Bangor

Fact-checks of campaign ads during election season by staffers at the Bangor daily newspaper.

Portland Press Herald | Portland

Fact-checks of campaign ads during election cycles by staffers at the daily newspaper in Portland, Maine.

Michigan

Bridge Michigan | Detroit

An ongoing reporting project published mainly in election years by Bridge Magazine, an online journal published by the non-profit Center for Michigan. Originally called The Truth Squad, the project began as a standalone site before it merged with the center and its magazine in 2012. The Bridge’s fact-checkers also have collaborated with public media’s Michigan Radio.

Local 4 Trust Index | Detroit

Fact-checking by the news team at WDIV-TV (Local 4), the NBC affiliate for Detroit, Michigan. Local 4 is owned by the Graham Media Group, a commercial media company whose regional TV stations launched their “Trust Index” reporting during the 2020 U.S. elections with help and training from Fathm, a media lab and international consulting group.

PolitiFact Michigan | Detroit

Affiliate of PolitiFact, staffed by reporters from the Detroit Free Press. The newspaper previously did fact-checking on its own during the 2014 midterm elections.

Minnesota

5 Eyewitness News Truth Test | St. Paul

Election-season fact-checking by the local ABC affiliate's political reporter.

CBS Minnesota Reality Check | Minneapolis

Fact-checking by the news staff at the local CBS affiliate in Minneapolis.

Missouri

KY3 Fact Finders | Springfield

Fact-checks by an anchor/reporter for the NBC affiliate in Springfield, Missouri. Focuses on rumors and questions from viewers.

News 4 Fact Check | St. Louis

Election-season fact-checks by reporters at CBS's local affiliate in St. Louis.

Nevada

Reno Gazette-Journal Fact Checker | Reno

Fact-checks by RGJ’s local government reporter and engagement director. The position is supported by donations and grants.

The Nevada Independent (Gigafact) | Las Vegas

Fact-checking for Repustar's Gigafact Project by a nonprofit news website in Las Vegas funded by corporate donations, memberships, foundation grants and other sources. Repustar is a privately funded benefit corporation based in the San Francisco Bay Area.

New Mexico

4 Investigates Fact Check | Albuquerque

Occasional fact-checks by the investigative news team at KOB-TV (KOB4), a commercial TV station owned by Hubbard Broadcasting Company that is NBC’s local affiliate in Albuquerque, New Mexico. A reporter conducts the fact-checks with the help of a political scientist from the University of New Mexico.

New York

News10NBC Fact Check | Rochester

Fact-checks by an anchor/reporter at the NBC affiliate in Rochester, New York, focusing on rumors and questions from viewers.

PolitiFact New York | Buffalo

Affiliate of PolitiFact, staffed by reporters from the Buffalo News.

North Carolina

CBS 17 Truth Tracker and Digging Deeper | Raleigh-Goldsboro

Fact-checks by a data reporter from the Raleigh area's local CBS affiliate — a commercial TV station owned by Nexstar Media Group. Televised versions of the "Digging Deeper" segments are supplemented with source material on the station's website, with political "Truth Tracker" reports appearing on its election news page.

PolitiFact North Carolina | Raleigh

Affiliate of PolitiFact, staffed by reporters at WRAL-TV, a privately owned commercial station that is NBC’s local affiliate in the Raleigh-Durham area. The News & Observer, a McClatchy-owned newspaper in Raleigh, was PolitiFact’s original local news partner in the state from 2016 to 2019.

The News & Observer’s Fact-Checking Project | Raleigh

Fact-checks by the reporting staff of The News & Observer, the McClatchy-owned newspaper in Raleigh, North Carolina. It freely distributes its fact-checking to other media in the state. The N&O previously did fact-checking as PolitiFact's state partner from 2016 to 2019.

WCNC Verify | Charlotte

WCNC is among the most active contributors in Tegna's Verify initiative — a companywide fact-checking and explanatory journalism project that involves a mix of local stories and national reporting shared across more than 60 stations. The Charlotte area's NBC affiliate relies on funding from advertising and local carriage fees from cable, satellite and digital TV service providers.

Oklahoma

The Frontier fact checks | Tulsa

Fact-checking by reporters from this non-profit news site based in Tulsa. The fact-checks appear in the form of thematic roundups posted with the site's other news stories. The Frontier's work is also used by other Oklahoma media. The Frontier Media Group Inc. operates the site with support from foundations, corporate supporters and individual donors.

Pennsylvania

News 8 “Ad Watch” | Lancaster

Ad Watch segments appear during election campaigns in televised newscasts and on the politics page of this local, commercially supported TV station. Based in Lancaster, Pennsylvania, WGAL-TV is owned by Hearst Television and is the local NBC affiliate for the Susquehanna Valley region, including the state capital in Harrisburg.

Texas

KHOU11 Verify | Houston

KHOU is among the most active contributors in Tegna's Verify initiative — a companywide fact-checking and explanatory journalism project that involves a mix of local stories and national reporting shared across more than 60 stations. The Houston area's CBS affiliate relies on funding from advertising and local carriage fees from cable, satellite and digital TV service providers.

KPRC Trust Index | Houston

Fact-checking by the news team at KPRC-TV, the NBC affiliate for Houston, Texas. KPRC is owned by the Graham Media Group, a commercial media company whose local TV stations launched their “Trust Index” reporting during the 2020 U.S. elections with help and training from Fathm, a media lab and international consulting group.

KSAT Trust Index | San Antonio

Fact-checking by the news team at KSAT-TV, the ABC affiliate in San Antonio, Texas. KSAT is owned by the Graham Media Group, a commercial media company whose regional TV stations launched their “Trust Index” reporting during the 2020 U.S. elections with help and training from Fathm, a media lab and international consulting group.

PolitiFact Texas | Austin, Houston, San Antonio

Affiliate of PolitiFact, with contributions from its three newspaper partners in the state: the Austin American-Statesman, the Houston Chronicle and the San Antonio Express-News.

WFAA’s Verify Road Trip | Dallas

WFAA-TV’s contribution to Tegna’s companywide fact-checking and explanatory journalism project is its “Verify Road Trip” segments. For these stories, the Dallas-area ABC affiliate enlists viewers to be “guest reporters” who join the news team to find answers to their questions. The station relies on funding from advertising and local carriage fees from cable, satellite and digital TV service providers. Verify Road Trip also has a YouTube page.

Virginia

PolitiFact Virginia | Richmond

Staffed by reporters from the news team at WCVE-FM in the Richmond/Petersburg area, where the station is part of a cluster of regional public broadcasters. WCVE revived PolitiFact's presence in the commonwealth after a nearly two-year hiatus. (PolitiFact's original local news partner, the Richmond Times-Dispatch, operated the Virginia site from 2010 to 2016.)

West Virginia

PolitiFact West Virginia | Morgantown

Affiliate of PolitiFact, staffed by student reporters at West Virginia University’s Reed College of Media.

Wisconsin

News 3 Reality Check | Madison

Video fact-checking segments by the News 3 team at WISC-TV on Wisconsin politics and TV ads, especially during election season.

PolitiFact Wisconsin | Milwaukee

Affiliate of PolitiFact, staffed by reporters from the Milwaukee Journal Sentinel.

Wisconsin Watch (Gigafact) | Madison

Fact-checking for Repustar's Gigafact Project by a nonprofit news outlet in Wisconsin funded by grants from foundations, individual and corporate donations and other sources. Repustar is a privately funded benefit corporation based in the San Francisco Bay Area.

PolitiFact at 15: Lessons about innovation, the Truth-O-Meter and pirates

Yes, the Truth-O-Meter is a gimmick. But 15 years later, it's still effective. Just don't look for "Barely True."

By Bill Adair - August 22, 2022

Fifteen years ago, I worked with a small group of reporters and editors at the St. Petersburg Times (now the Tampa Bay Times) to start something bold: a fact-checking website that called out politicians for being liars.

That concept was too gutsy for the Times political editor. Sure, he liked the idea, he said at a meeting of editors, “but I want nothing to do with it.”

That was my first lesson that PolitiFact was going to disrupt the status quo, especially for political journalists. Back then, most of them were timid about calling out lies by politicians. They were afraid fact-checking would displease the elected officials they covered. I understood his reluctance because I had been a political reporter for many years. But after watching the lying grow in the early days of the internet, I felt it was time for us to change our approach.

Today, some political reporters have developed more courage, but many still won’t call out the falsehoods they hear. PolitiFact does. So I’m proud it’s going strong.

It’s now owned by the Poynter Institute, and it has evolved with the times. As a proud parent, allow me to brag: PolitiFact has published more than 22,000 fact-checks, won a Pulitzer Prize and sparked a global movement for political fact-checking. Pretty good for a journalism org that’s not even old enough to drive.

On PolitiFact’s 15th birthday, I thought it would be useful to share the lesson about disruption and a few others from my unusual journey through American political journalism. Among them:

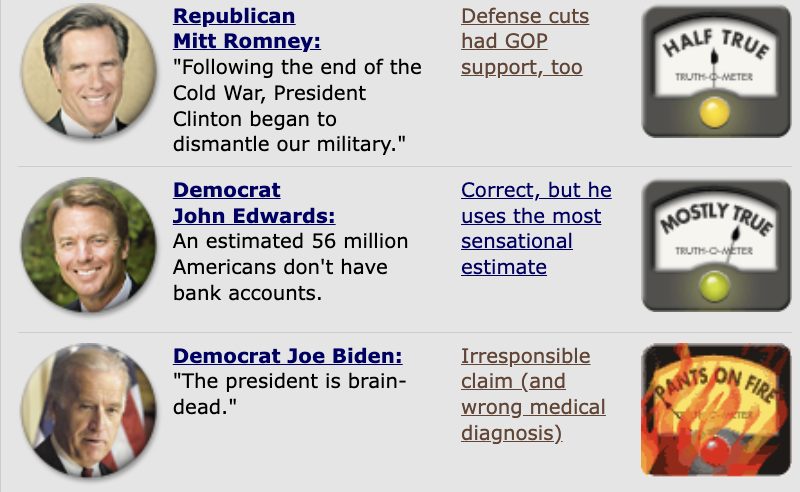

Gimmicks are good

The Truth-O-Meter — loved by many and loathed by some — is at times derided as a mere gimmick. I used to bristle at that word. Now I’m fine with it.

My friend Brooks Jackson, the co-founder of FactCheck.org, often teased me about the meter. That teasing culminated in a farewell essay that criticized “inflexible rating systems” like our meter because they were too subjective.

I agree with Brooks to an extent. Reducing a complex fact-check to a rating such as Half True is subjective. But it's a tremendous service to readers who may not want to read a 1,000-word fact-check article. What's more, while it relies on the judgment of the journalists, it's not as subjective as some people think. Each fact-check is thoroughly researched and documented, and PolitiFact has a detailed methodology for its ratings.

Yes, the Truth-O-Meter is a gimmick! (I once got recognized in an airport by a lady who had seen me on TV and said, “You’re the Truth-O-Meter guy!”) But its ratings are the product of PolitiFact’s thorough and transparent journalism. It’s a gimmick with substance.

Empower the pirates

I was the founding editor, the guy with the initial ideas and some terrible sketches (my first design had an ugly rendering of the meter with “Kinda True” scribbled above). But the editors at the Tampa Bay Times believed in the idea enough to assign other staffers who had actual talent, including a spirited data journalist named Matt Waite and a marvelous designer named Martin Frobisher.

Times Executive Editor Neil Brown, now president of Poynter, gave us freedom. He cut me loose from my duties as Washington bureau chief so I could write sample fact-checks. Waite and Frobisher were allowed to build a website outside the infrastructure of the Times website so we had a fresh look and more flexibility to grow.

We were like a band of pirates, empowered to be creative. We were free of the gravitational pull of the Times, and not bound by its rules and conventions. That gave us a powerful spirit that infused everything we did.

Design is as important as content

We created PolitiFact at a time when political journalism, even on the web, was just words or pictures. But we spent as much energy on the design as on the journalism.

It was 2007 and we were an American newspaper, so our team didn't have a lot of experience or resources. But we realized that we could use the design to help explain our unique journalism. The main section of our homepage had a simple look: the face of the politician being checked (in the style of a campaign button), the statement the politician made and the Truth-O-Meter showing the rating they earned. We also created report cards so readers could see tallies of how many True, Half True, False or other ratings a politician had earned.

The design not only guided readers to our fact-check articles, it told the story as much as the words.

Twitter is not real life

My occasional bad days as editor always seemed more miserable because of Twitter. If we made an error or just got attacked by a partisan group, it showed up first and worst on Twitter.

I stewed over that. Twitter made it seem like the whole world hated us. The platform doesn’t foster a lot of nuance. You’re loved or hated. I got so caught up in it that when I left the office to go to lunch, I’d look around and have irrational thoughts about whether everyone had been reading the tweets and thought I was an idiot.

But then when I went out with friends or talked with my family, I realized that real people don’t use Twitter. It’s largely a platform for journalists and the most passionate (read: angry) political operatives. My friends and family never saw the attacks on us, nor would they care if they did.

So when the talk on Twitter turned nasty (which was often), I would remind our staff: Twitter is not real life.

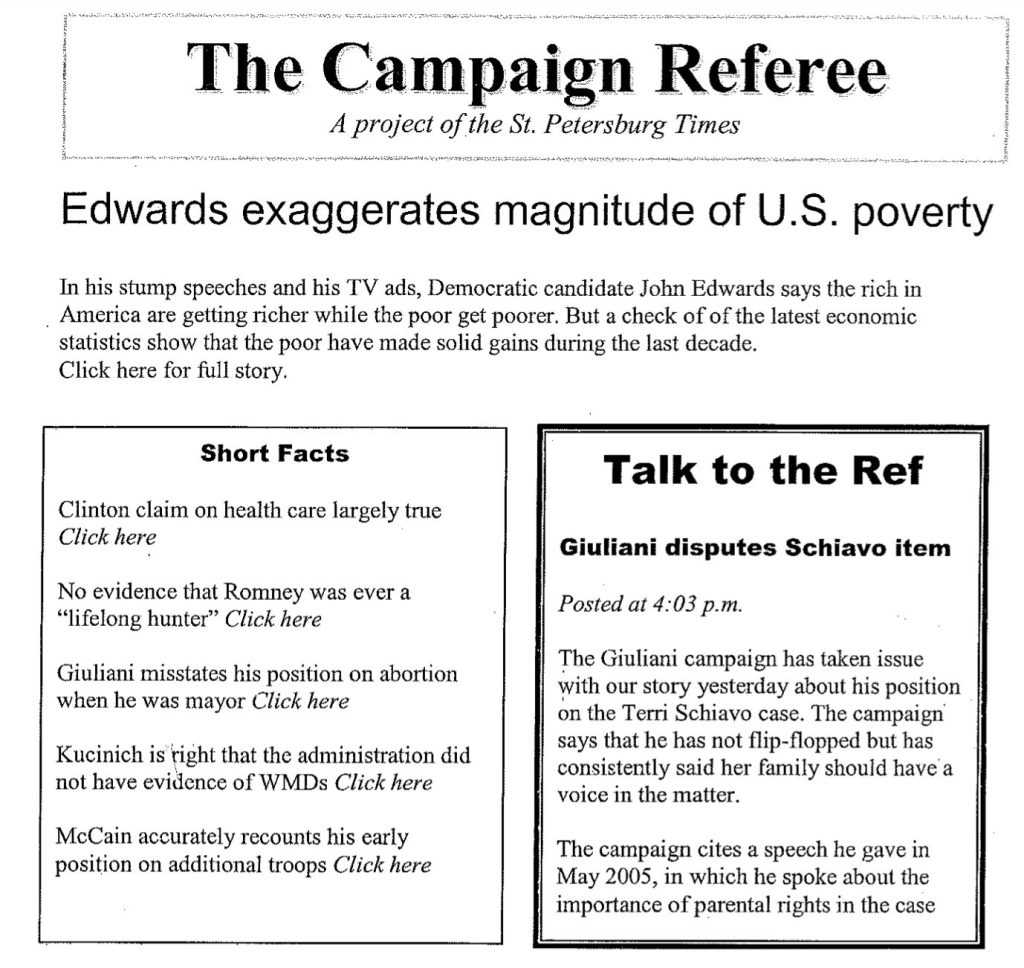

People hate referees

My initial sketch of the website was called “The Campaign Referee” because I thought it was a good metaphor for our work: We were calling the fouls in a rough and tumble sport. But Times editors vetoed that name… and I soon saw why.

People hate referees! On many days, it seemed PolitiFact made everyone mad!

That phenomenon became clearer in 2013 when I stepped down as editor and came to Duke as a journalism professor. I became a Duke basketball fan and quickly noticed the shoddy work of the referees in the Atlantic Coast Conference. THEY ARE SO UNFAIR! Their calls always favor the University of North Carolina! What's the deal? Did all the refs attend UNC?

Seek inspiration in unlikely places

When we expanded PolitiFact to the states (PolitiFact Wisconsin, PolitiFact Florida, etc.), our model was similar to fast-food franchises. We licensed our brand to local newspapers and TV and radio stations and let them do their own fact-checks using our Truth-O-Meter.

That was risky. We were allowing other news organizations to use our name and methods. If they did shoddy work, it would damage our brand. But how could we protect ourselves?

I got inspiration from McDonald’s and Subway. I assigned one of our interns to write a report about how those companies ensured quality as they franchised. The answers: training sessions, manuals that clearly described how to consistently make the Big Macs and sandwiches, and quality control inspectors.

We followed each recommendation. I conducted detailed training sessions for the new fact-checkers in each town and then checked the quality by taking part in the editing and ratings for several weeks.

I gave every fact-checker "The Truth-O-Meter Owner's Manual," a detailed guide to our journalism that reflected our lighthearted spirit. It began: "Congratulations on your purchase of a Truth-O-Meter! If operated and maintained properly, your Truth-O-Meter will give you years of enjoyment! But be careful because incorrect operation can cause an unsafe situation."

Adjust to complaints and dump the duds

We made adjustments. We had envisioned Pants on Fire as a joke rating (the first one was on a Joe Biden claim that President Bush was brain-dead), but readers liked the rating so much that we decided to use it on all claims that were ridiculously false. (There were a lot!)

In the meantime, though, we lost enthusiasm for the animated GIF for Pants on Fire. The burning Truth-O-Meter was amusing the first few times you saw it, but then … it was too much. Pants on Fire is now a static image.

As good as our design was, one section on the home page called the Attack File was too confusing. It showed the person making the attack as well as the individual being attacked. But readers didn’t grasp what we were doing. We 86’d the Attack File.

Initially, the rating between Half True and False was called Barely True, but many people didn’t understand it – and the National Republican Congressional Committee once distorted it. When the NRCC earned a Barely True, the group boasted in a news release, “POLITIFACT OHIO SAYS TRUE.”

Um, no. We changed the rating to Mostly False. We also rated the NRCC’s news release. This time: Pants on Fire!

Fact-checkers extend their global reach with 391 outlets, but growth has slowed

Efforts to intercept misinformation are expanding in more than 100 countries, but the pace of new fact-checking projects continues to slow.

By Mark Stencel, Erica Ryan and - June 17, 2022

The number of fact-checkers around the world doubled over the past six years, with nearly 400 teams of journalists and researchers taking on political lies, hoaxes and other forms of misinformation in 105 countries.

The Duke Reporters’ Lab annual fact-checking census counted 391 fact-checking projects that were active in 2021. Of those, 378 are operating now.

That’s up from a revised count of 186 active sites in 2016 – the year when the Brexit vote and the U.S. presidential election elevated global concerns about the spread of inaccurate information and rumors, especially in digital media. Misleading posts about ethnic conflicts, wars, the climate and the pandemic only amplified those worries in the years since.

Since last year’s census, we have added 51 sites to our global fact-checking map and database. In that same 12 months, another seven fact-checkers closed down.

While this vital journalism now appears in at least 69 languages on six continents, the pace of growth in the international fact-checking community has slowed over the past several years.

The largest growth was in 2019, when 77 new fact-checking sites and organizations made their debut. Based on our updated counts since then, the number was 58 in 2020 and 22 last year.

New Fact Checkers by Year

(Note: The adjusted number of 2021 launches may increase over time as the Reporters’ Lab identifies other fact-checkers we have not yet discovered.)

These numbers may signal a worrisome trend, or they could mean that the growth of the past several years has saturated the market or paused in the wake of the global pandemic. But we also expect our numbers for last year to eventually increase as we continue to identify other fact-checkers, as happens every year.

More than a third of the growth since 2019's bumper crop came from existing fact-checking operations that added new outlets to expand their reach to new places and different audiences. That includes Agence France-Presse, the French international news service, which launched at least 17 new sites in that period. In Africa, Dubawa and PesaCheck opened nine new bureaus, while Asia's Boom and Fact Crescendo opened five. In addition, Delfi and Pagella Politica in Europe and PolitiFact in North America each launched a new satellite.

Fact-checking expanded quickly in Latin America in earlier years, but less so of late. Since 2019, we have seen three launches in South America (one of which has since folded), plus one more focused on Cuba.

Active Fact-Checkers by Year

The Reporters’ Lab is monitoring another trend: fact-checkers’ use of rating systems. These ratings are designed to succinctly summarize a fact-checker’s conclusions about political statements and other forms of potential misinformation. When we analyzed the use of these features in past reports, we found that about 80-90% of the fact-checkers we looked at relied on these meters and standardized labels to prominently convey their findings.

But that approach appears to be less common among newer fact-checkers. Our initial review of the fact-checkers that launched in 2020 found that fewer than half seemed to be using rating systems. And among the Class of 2021, only a third seemed to rely on predefined ratings.

We also have seen established fact-checkers change their approach in handling ratings.

The Norwegian fact-checking site Faktisk, for instance, launched in 2017 with a five-point, color-coded rating system that was similar to ones used by most of the fact-checkers we monitor: “helt sant” (for “absolutely true” in green) to “helt feil” (“completely false” in red). But during a recent redesign, Faktisk phased out its ratings.

“The decision to move away from the traditional scale was hard and subject to a very long discussion and consideration within the team,” said editor-in-chief Kristoffer Egeberg in an email. “Many felt that a rigid system where conclusions had to ‘fit the glove’ became kind of a straitjacket, causing us to either drop claims that weren’t precise enough or too complex to fit into one fixed conclusion, or to instead of doing the fact-check – simply write a fact-story instead, where a rating was not needed.”

Egeberg also noted that sometimes “the color of the ratings became the main focus rather than the claim and conclusion itself, derailing the important discussion about the facts.”

We plan to examine this trend in the future and expect this discussion may emerge during the conversations at the annual Global Fact summit in Oslo, Norway, next week.

The Duke Reporters’ Lab began keeping track of the international fact-checking community in 2014, when it organized a group of about 50 people who gathered in London for what became the first Global Fact meeting. This year about 10 times that many people (500 journalists, technologists, truth advocates and academics) are expected to attend the ninth summit. The conferences are now organized by the International Fact-Checking Network, based at the Poynter Institute in St. Petersburg, Florida. This will be the group’s first large in-person meeting in three years.

Fact-Checkers by Continent

Like their audiences, the fact-checkers are a multilingual community, and many of these sites publish their findings in multiple languages, either on the same site or, in some cases, on separate sites. English is the most common, used on at least 166 sites, followed by Spanish (55), French (36), Arabic (14), Portuguese (13), Korean (13), German (12) and Hindi (11).

Roughly three-fifths of the fact-checkers are affiliated with media organizations (226 out of 378, or about 60%). But there are other affiliations and business models, too, including 24 with academic ties and 45 that are part of a larger nonprofit or nongovernmental organization. Some of these fact-checkers have overlapping arrangements with multiple organizations. More than a fifth of the community (86 out of 378) operate independently.

About the census:

Here’s how we decide which fact-checkers to include in the Reporters’ Lab database. The Lab continually collects new information about the fact-checkers it identifies, such as when they launched and how long they last. That’s why the updated numbers for earlier years in this report are higher than the counts the Lab included in earlier reports. If you have questions, updates or additions, please contact Mark Stencel, Erica Ryan or Joel Luther.

Related links: Previous fact-checking census reports

MediaReview: A next step in solving the misinformation crisis

An update on what we’ve learned from 1,156 entries of MediaReview, our latest collaboration to combat misinformation.

By - June 2, 2022

When a 2019 video went viral after being edited to make House Speaker Nancy Pelosi look inebriated, it took 32 hours for one of Facebook’s independent fact-checking partners to rate the clip false. By then, the video had amassed 2.2 million views, 45,000 shares, and 23,000 comments – many of them calling her “drunk” or “a babbling mess.”

The year before, the Trump White House circulated a video that was edited to make CNN’s Jim Acosta appear to aggressively react to a mic-wielding intern during a presidential press conference.

A string of high-profile misleading videos like these in the run-up to the 2020 U.S. election stoked long-standing fears about skillfully manipulated videos, sometimes made with AI. The main worry then was how quickly these doctored videos would become the next battleground in the global war against misinformation. But new research by the Duke Reporters’ Lab and a group of participating fact-checking organizations in 22 countries found that other, far less sophisticated forms of media manipulation were much more prevalent.

By using a unified tagging system called MediaReview, the Reporters’ Lab and 43 fact-checking partners collected and categorized more than 1,000 fact-checks based on manipulated media content. Those accumulated fact-checks revealed that:

- While we began this process in 2019 expecting deepfakes and other sophisticated media manipulation tactics to be the most imminent threat, we’ve predominantly seen low-budget “cheap fakes.” The vast majority of media-based misinformation is rated “Missing Context,” or, as we’ve defined it, “presenting unaltered media in an inaccurate manner.” In total, fact-checkers have applied the Missing Context rating to 56% of the MediaReview entries they’ve created.

- Most of the fact-checks in our dataset, 78%, come from content on Meta’s platforms Facebook and Instagram, likely driven by the company’s well-funded Third-Party Fact-Checking Program. These platforms are also more likely to label or remove fact-checked content. More than 80% of fact-checked posts on Instagram and Facebook are either labeled to add context or no longer on the platform. In contrast, more than 60% of fact-checked posts on YouTube and Twitter remain intact, without labeling to indicate their accuracy.

- Without reliable tools for archiving manipulated material that is removed or deleted, it is challenging for fact-checkers to track trends and bad actors. Fact-checkers used a variety of tools, such as the Internet Archive’s Wayback Machine, to attempt to capture this ephemeral misinformation; but only 67% of submitted archive links were viewable on the chosen archive when accessed at a later date, while 33% were not.

The Reporters’ Lab research also demonstrated MediaReview’s potential — especially based on the willingness and enthusiastic participation of the fact-checking community. With the right incentives for participating fact-checkers, MediaReview provides efficient new ways to help intercept manipulated media content — in large part because so many variations of the same claims appear repeatedly around the world, as the pandemic has continuously demonstrated.

The Reporters’ Lab began developing the MediaReview tagging system around the time of the Pelosi video, when Google and Facebook separately asked the Duke team to explore possible tools to fight the looming media misinformation crisis.

MediaReview is a sibling to ClaimReview, an initiative the Reporters’ Lab began leading in 2015 to create infrastructure that makes fact-checkers’ articles machine-readable and easy to use in search engines, mobile apps, and other projects. Called “one of the most successful ‘structured journalism’ projects ever launched,” the ClaimReview schema has proven immensely valuable. Adopted by 177 fact-checking organizations around the world, it has been used to tag 136,744 articles, establishing a large and valuable corpus of fact-checks: tens of thousands of statements from politicians and social media accounts around the world analyzed and rated by independent journalists.
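As a rough illustration of what “machine-readable” means here, the sketch below builds a single ClaimReview record as JSON-LD, the form in which such markup is typically embedded in a fact-check page. The property names (`claimReviewed`, `itemReviewed`, `reviewRating`, `alternateName` and so on) come from the schema.org ClaimReview and Rating types. The helper `build_claim_review` and every URL, name and rating value are hypothetical placeholders, not the Reporters’ Lab’s own tooling.

```python
import json


def build_claim_review(url, claim, claimant, rating_label, rating_value,
                       best=5, worst=1, publisher="Example Fact-Checker",
                       date_published="2022-06-02"):
    """Return a ClaimReview record as a JSON-LD-ready dict (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "ClaimReview",
        "url": url,                      # the fact-check article itself
        "datePublished": date_published,
        "claimReviewed": claim,          # the statement being checked
        "author": {"@type": "Organization", "name": publisher},
        "itemReviewed": {
            "@type": "Claim",
            "author": {"@type": "Person", "name": claimant},
        },
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating_value,  # position on the outlet's own scale
            "bestRating": best,
            "worstRating": worst,
            "alternateName": rating_label,  # human-readable verdict
        },
    }


review = build_claim_review(
    url="https://factcheck.example.org/2022/06/claim",
    claim="Hypothetical claim text",
    claimant="Hypothetical Politician",
    rating_label="False",
    rating_value=1,
)
print(json.dumps(review, indent=2))
```

Because every outlet fills in the same fields, a search engine or researcher can read the verdict from `reviewRating` without having to parse the article prose.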

But ClaimReview proved insufficient to address the new, specific challenges presented by misinformation spread through multimedia. Thus, in September 2019, the Duke Reporters’ Lab began working with the major search engines, social media services, fact-checkers and other interested stakeholders on an open process to develop MediaReview, a new sibling of ClaimReview that creates a standard for manipulated video and images. Throughout pre-launch testing phases, 43 fact-checking outlets have used MediaReview to tag 1,156 images and videos, again providing valuable, structured information about whether pieces of content are legitimate and how they may have been manipulated.

In an age of misinformation, MediaReview, like ClaimReview before it, offers something vital: real-time data on which pieces of media are truthful and which ones are not, as verified by the world’s fact-checking journalists.

But the work of MediaReview is not done. New fact-checkers must be brought on board in order to reflect the diversity and global reach of the fact-checking community, the major search and social media services must incentivize the creation and proper use of MediaReview, and more of those tech platforms and other researchers need to learn about, and make full use of, the opportunities this new tagging system can provide.

An Open Process

MediaReview is the product of a two-year international effort to get input from the fact-checking community and other stakeholders. It was first adapted from a guide to manipulated video published by The Washington Post, which was initially presented at a Duke Tech & Check meeting in the spring of 2019. The Reporters’ Lab worked with Facebook, Google, YouTube, Schema.org, the International Fact-Checking Network, and The Washington Post to expand this guide to include a similar taxonomy for manipulated images.

The global fact-checking community has been intimately involved in the process of developing MediaReview. Since the beginning of the process, the Reporters’ Lab has shared all working drafts with fact-checkers and has solicited feedback and comments at every step. We and our partners have also presented to the fact-checking community several times, including at the Trusted Media Summit in 2019, a fact-checkers’ community meeting in 2020, Global Fact 7 in 2020, Global Fact 8 in 2021 and several open “office hours” sessions with the sole intent of gathering feedback.

Throughout development and testing, the Reporters’ Lab held extensive technical discussions with Schema.org to properly validate the proposed structure and terminology of MediaReview, and solicited additional feedback from third-party organizations working in similar spaces, including the Partnership on AI, Witness, Meedan and Storyful.

Analysis of the First 1,156

As of February 1, 2022, fact-checkers from 43 outlets spanning 22 countries had made 1,156 MediaReview entries.

Number of outlets creating MediaReview by country.

Number of MediaReview entries created by outlet.

Our biggest lesson in reviewing these entries: The way misinformation is conveyed most often through multimedia is not what we expected. We began this process in 2019 expecting deepfakes and other sophisticated media manipulation tactics to be an imminent threat, but we’ve predominantly seen low-budget “cheap fakes.” What we’ve seen consistently throughout testing is that the vast majority of media-based misinformation is rated “Missing Context,” or, as we’ve defined it, “presenting unaltered media in an inaccurate manner.” In total, fact-checkers have applied the Missing Context rating to 56% of the MediaReview entries they’ve created.

The “Original” rating has been the second most applied, accounting for 20% of the MediaReview entries created. As we’ve heard from fact-checkers through our open feedback process, a substantial portion of the media being fact-checked is not manipulated at all; rather, it consists of original videos of people making false claims. Going forward, we know we need to be clear about the use of the “Original” rating as we help more fact-checkers get started with MediaReview, and we need to continue to emphasize the use of ClaimReview to counter the false claims contained in these kinds of videos.
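A MediaReview record follows the same pattern as ClaimReview, but it describes how a piece of media was presented or altered rather than whether a statement is true. The sketch below assumes that the “Missing Context” and “Original” ratings correspond to the schema.org enumeration members `DecontextualizedContent` and `OriginalMediaContent`. It also assumes that the checked media is described by a `MediaReviewItem` whose `mediaItemAppearance` points to the video or image. Those mappings, the helper name and all sample values are assumptions and should be checked against the current schema.org vocabulary.

```python
import json

# Assumed correspondence between rating labels named in this report and
# schema.org MediaManipulationRatingEnumeration members (verify before use).
RATING_TO_CATEGORY = {
    "Missing Context": "https://schema.org/DecontextualizedContent",
    "Original": "https://schema.org/OriginalMediaContent",
}

SUPPORTED_MEDIA_TYPES = ("VideoObject", "ImageObject")


def build_media_review(url, media_url, media_type, rating, publisher):
    """Return a MediaReview record as a JSON-LD-ready dict (hypothetical helper)."""
    if rating not in RATING_TO_CATEGORY:
        raise ValueError(f"unsupported rating label: {rating!r}")
    if media_type not in SUPPORTED_MEDIA_TYPES:
        raise ValueError(f"unsupported media type: {media_type!r}")
    return {
        "@context": "https://schema.org",
        "@type": "MediaReview",
        "url": url,  # the fact-check article
        "author": {"@type": "Organization", "name": publisher},
        "mediaAuthenticityCategory": RATING_TO_CATEGORY[rating],
        "itemReviewed": {
            "@type": "MediaReviewItem",
            # Where the checked video or image was found.
            "mediaItemAppearance": [{"@type": media_type, "contentUrl": media_url}],
        },
    }


review = build_media_review(
    url="https://factcheck.example.org/2022/06/video",
    media_url="https://media.example.com/clip.mp4",
    media_type="VideoObject",
    rating="Missing Context",
    publisher="Example Fact-Checker",
)
print(json.dumps(review, indent=2))
```

Rejecting unknown labels up front mirrors the feedback loop described here, in which Reporters’ Lab staff steered fact-checkers toward consistent use of each rating.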

Throughout the testing process, the Duke Reporters’ Lab has monitored incoming MediaReview entries and provided feedback to fact-checkers where applicable. We’ve heard from fact-checkers that that feedback was valuable and helped clarify the rating system.

Among the media links checked by third-party fact-checkers, the vast majority so far were posted on Facebook:

Share of links in the MediaReview dataset by platform.

Facebook’s well-funded Third-Party Fact-Checking Program likely contributes to this rate; fact-checkers are paid directly to check content on Facebook’s platforms, making that content more prevalent in our dataset.

We also reviewed the current status of links checked by fact-checkers and tagged with MediaReview. With different platforms having different policies on how they deal with misinformation, some of the original posts are intact, others have been removed by either the platform or the user, and some have a context label appended with additional fact-check information. By platform, Instagram is the most likely to append additional information, while YouTube is the most likely to present fact-checked content in its original, intact form, not annotated with any fact-checking information: 72.5% of the media checked from YouTube are still available in their original format on the platform.

Status of fact-checked media broken down by platform, showing the percentage of checked media either labeled with additional context, removed, or presented fully intact.

In addition, we noted that fact-checkers often (roughly 25% of the time) entered an archival link in the “Media URL” field in an attempt to preserve the video or image, because this kind of ephemeral misinformation is often quickly deleted by the platforms or the users. Notably, though, these existing archive systems are unreliable: only 67% of submitted archive links were viewable on the archive, while 33% were not. Perma.cc was the most reliable archiving system fact-checkers used, but it successfully displayed only 80% of checked media. And because it is a paid tool, there is an opportunity to build a new system to preserve fact-checked media.

Success rate of archival tools used by fact-checkers in properly displaying the fact-checked media.
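The success rates above come down to a simple tally. For each archiving tool, divide the number of archive links that still displayed the media by the number submitted. The hypothetical helper below shows that calculation. It assumes each link has already been revisited and marked as viewable or not, and it does not itself fetch anything from the Wayback Machine, Perma.cc or any other service.

```python
from collections import defaultdict


def archive_success_rates(records):
    """Tally archive-link success per tool (hypothetical helper).

    records: iterable of (tool_name, viewable) pairs, where viewable is True
    if the archived copy still displayed the checked media when revisited.
    Returns (per_tool_rates, overall_rate) as fractions between 0 and 1.
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for tool, viewable in records:
        totals[tool] += 1
        if viewable:
            successes[tool] += 1
    per_tool = {tool: successes[tool] / totals[tool] for tool in totals}
    n = sum(totals.values())
    overall = sum(successes.values()) / n if n else 0.0
    return per_tool, overall
```

Reporting both the per-tool and the overall figure separates two questions: how reliable the archives are in general, and which archive a fact-checker should choose.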

Next Steps

Putting MediaReview to use: Fact-checkers have emphasized to us the need for social media companies and search engines to make use of these new signals. They’ve highlighted that usability testing would help ensure that MediaReview data is displayed prominently on the tech platforms.

Archiving the images and videos: As noted above, current archiving systems are insufficient to capture the media misinformation fact-checkers are reporting on. Currently, fact-checkers using MediaReview are limited to quoting or describing the video or image they checked and including the URL where they discovered it. There’s no easy, consistent workflow for preserving the content itself. Manipulated images and videos are often removed by social media platforms or deleted or altered by their owners, leaving no record of how they were manipulated or presented out of context. In addition, if the same video or image emerges again in the future, it can be difficult to determine if it has been previously fact-checked. A repository of this content — which could be saved automatically as part of each MediaReview submission — would allow for accessibility and long-term durability for archiving, research, and more rapid detection of misleading images and video.
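One way to approach the repeat-detection problem described above is content addressing. Each checked file is stored under a hash of its bytes, so a file that resurfaces later can be matched to earlier fact-checks. The class below is a hypothetical, in-memory sketch of that idea using SHA-256 from Python’s standard library. It is not a design the Reporters’ Lab has proposed. An exact hash matches only byte-identical copies, so a re-encoded, cropped or screenshotted version would slip past it. Catching those copies would require perceptual hashing or similarity search, which this sketch does not attempt.

```python
import hashlib


class MediaArchive:
    """Hypothetical in-memory store of checked media, keyed by SHA-256 digest."""

    def __init__(self):
        self._by_digest = {}

    @staticmethod
    def digest(media_bytes: bytes) -> str:
        return hashlib.sha256(media_bytes).hexdigest()

    def save(self, media_bytes: bytes, fact_check_url: str) -> str:
        """Preserve the media and link it to a fact-check; return its key."""
        key = self.digest(media_bytes)
        entry = self._by_digest.setdefault(
            key, {"media": media_bytes, "fact_checks": []}
        )
        # The same file can be covered by several fact-checks; keep them all.
        if fact_check_url not in entry["fact_checks"]:
            entry["fact_checks"].append(fact_check_url)
        return key

    def lookup(self, media_bytes: bytes) -> list:
        """Return fact-check URLs previously linked to identical media, if any."""
        entry = self._by_digest.get(self.digest(media_bytes))
        return list(entry["fact_checks"]) if entry else []
```

Saving the media bytes alongside each submission also addresses the durability problem: the archived copy survives even after the original post is deleted.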

Making more: We continue to believe that fact-checkers need incentives to continue making this data. The more fact-checkers use these schemas, the more we increase our understanding of the patterns and spread of misinformation around the world — and the ability to intercept inaccurate and sometimes dangerous content. The effort required to produce ClaimReview or MediaReview is relatively low, but adds up cumulatively — especially for smaller teams with limited technological resources.

While fact-checkers created the first 1,156 entries solely to help the community refine and test the schema, further use by the fact-checkers must be encouraged by the tech platforms’ willingness to adopt and utilize the data. Currently, 31% of the links in our MediaReview dataset are still fully intact where they were first posted; they have not been removed or had any additional context added. Fact-checkers have displayed their eagerness to research manipulated media, publish detailed articles assessing their veracity, and make their assessments available to the platforms to help curb the tide of misinformation. Search engines and social media companies must now decide to use and display these signals.

Appendix: MediaReview Development Timeline

MediaReview is the product of a two-year international effort involving the Duke Reporters’ Lab, the fact-checking community, the tech platforms and other stakeholders.

Mar 28, 2019

Phoebe Connelly and Nadine Ajaka of The Washington Post first presented their idea for a taxonomy classifying manipulated video at a Duke Tech & Check meeting.

Sep 17, 2019

The Reporters’ Lab met with Facebook, Google, YouTube, Schema.org, the International Fact-Checking Network, and The Washington Post in New York to plan to expand this guide to include a similar taxonomy for manipulated images.

Oct 17, 2019

The Reporters’ Lab emailed a first draft of the new taxonomy to all signatories of the IFCN’s Code of Principles and asked for comments.

Nov 26, 2019

After incorporating suggestions from the first draft document and generating a proposal for Schema.org, we began to test MediaReview for a selection of fact-checks of images and videos. Our internal testing helped refine the draft of the Schema proposal, and we shared an updated version with IFCN signatories on November 26.

Jan 30, 2020

The Duke Reporters’ Lab, IFCN and Google hosted a Fact-Checkers Community Meeting at the offices of The Washington Post. Forty-six people, representing 21 fact-checking outlets and 15 countries, attended. We presented slides about MediaReview, asked fact-checkers to test the creation process on their own, and again asked for feedback from those in attendance.

Apr 16, 2020

The Reporters’ Lab began a testing process with three of the most prominent fact-checkers in the United States: FactCheck.org, PolitiFact, and The Washington Post. We have publicly shared their test MediaReview entries, now totaling 421, throughout the testing process.

Jun 1, 2020

We wrote and circulated a document summarizing the remaining development issues with MediaReview, including new issues we had discovered through our first phase of testing. We also proposed new Media Types for “image macro” and “audio,” and new associated ratings, and circulated those in a document as well. We published links to both of these documents on the Reporters’ Lab site (We want your feedback on the MediaReview tagging system) and published a short explainer detailing the basics of MediaReview (What is MediaReview?).

Jun 23, 2020

We again presented on MediaReview at Global Fact 7 in June 2020, detailing our efforts so far and again asking for feedback on our new proposed media types and ratings and our Feedback and Discussion document. The YouTube video of that session has been viewed more than 500 times by fact-checkers around the globe, and dozens participated in the live chat.

Apr 1, 2021

We hosted another session on MediaReview for IFCN signatories on April 1, 2021, again seeking feedback and updating fact-checkers on our plans to further test the Schema proposal.

Jun 3, 2021

In June 2021, the Reporters’ Lab worked with Google to add MediaReview fields to the Fact Check Markup Tool and expand testing to a global userbase. We regularly monitored MediaReview and maintained regular communication with fact-checkers who were testing the new schema.

Nov 10, 2021

We held an open feedback session with fact-checkers on November 10, 2021, providing the community another chance to refine the schema. Overall, fact-checkers have told us that they’re pleased with the process of creating MediaReview and that its similarity to ClaimReview makes it easy to use. As of February 1, 2022, fact-checkers have made a total of 1,156 MediaReview entries.

For more information about MediaReview, contact Joel Luther.

From Sofia to Springfield, fact-checking extends its reach

Reporters' Lab prepares for its annual fact-checking survey in advance of the Global Fact summit in Oslo

By Erica Ryan - April 19, 2022

Fact-checkers in the Gambia and Bulgaria are among the new additions to the Duke Reporters’ Lab database of fact-checking sites around the world. The total now stands at 356, with more updates to come.

FactCheck Gambia and Factcheck.bg both got their start in 2021. Fact-checkers at the Gambian site checked claims in the run-up to the country’s presidential elections in December, and they have continued to examine President Adama Barrow’s inaugural speech as well as other statements from officials and social media claims.

In Bulgaria, the initiative begun by the nonprofit Association of European Journalists-Bulgaria has checked claims ranging from a purported ban on microwaves to concerns about coronavirus vaccines, and it has received increased interest for its work during the war in Ukraine.

Other additions to the database include a couple of TV news features in the United States: the News10NBC Fact Check from WHEC-TV in Rochester, New York, and the KY3 Fact Finders at KYTV-TV in Springfield, Missouri. Both projects focus on rumors and questions from local television viewers.

This is crunch time for the Reporters’ Lab. We’re busily updating our database for the Lab’s annual fact-checking census. We plan to publish this yearly overview in June, shortly before the International Fact-Checking Network convenes its annual Global Fact summit in Oslo.

About the census: Here’s how we decide which fact-checkers to include in the Reporters’ Lab database. The Lab continually collects new information about the fact-checkers it identifies, such as when they launched and how long they last. If you have questions, updates or additions, please contact Lab co-director Mark Stencel (mark.stencel@duke.edu) and project manager Erica Ryan (elryan@gmail.com).

Reporters’ Lab Takes Part in Eighth ‘Global Fact’ Summit

The Reporters’ Lab team participated in five conference sessions and hosted a daily virtual networking table at the conference with more than 1,000 attendees.

By - November 8, 2021

The Duke Reporters’ Lab spent this year’s eighth Global Fact conference helping the world’s fact-checkers learn more about tagging systems that can extend the reach of their work; encouraging a sense of community among organizations around the globe; and discussing new research that offers potent insights into how fact-checkers do their jobs.

This year’s Global Fact took place virtually for the second time, following years of meeting in person all around the world, in cities such as London, Buenos Aires, Madrid, Rome, and Cape Town. More than 1,000 fact-checkers, academic researchers, industry experts, and representatives from technology companies attended the virtual conference.

Over three days, the Reporters’ Lab team participated in five conference sessions and hosted a daily virtual networking table.

- Reporters’ Lab director and IFCN co-founder Bill Adair delivered opening remarks for the conference, focused on how fact-checkers around the world have closely collaborated in recent years.

- Mark Stencel, co-director of the Reporters’ Lab, moderated the featured talk with Tom Rosenstiel, the Eleanor Merrill Visiting Professor on the Future of Journalism at the Philip Merrill College of Journalism at the University of Maryland and coauthor of The Elements of Journalism. Rosenstiel previously served as executive director of the American Press Institute. He discussed research into how the public responds to the core values of journalism and how fact-checkers might be able to build more trust with their audience.

- Thomas Van Damme presented findings from his master’s thesis, “Global Trends in Fact-Checking: A Data-Driven Analysis of ClaimReview,” during a panel discussion moderated by Lucas Graves and featuring Joel Luther of the Reporters’ Lab and Karen Rebelo, a fact-checker from BOOM in India. Van Damme’s analysis reveals fascinating trends from five years of ClaimReview data and demonstrates ClaimReview’s usefulness for academic research.

- Luther also prepared two pre-recorded webinars that were available throughout the conference:

- Introduction to ClaimReview: the Hidden Infrastructure of Fact-Checks — This session was designed for newcomers to ClaimReview but includes some helpful tips and information for everyone using ClaimReview for their fact-checks.

- MediaReview: A Sibling to ClaimReview to Tag Manipulated Media — This video offers an overview of how to tag images and videos, as well as some findings from the MediaReview testing we’ve done so far.

In addition, the Reporters’ Lab is excited to reconnect with fact-checkers again at 8 a.m. Eastern on Wednesday, November 10, for a feedback session on MediaReview. We’re pleased to report that fact-checkers have now used MediaReview to tag their fact-checks of images and videos 841 times, and we’re eager to hear any additional feedback and continue the open development process we began in 2019 in close collaboration with the IFCN.

Keeping a sense of community in the IFCN

For Global Fact 8, a reminder of the IFCN's important role bringing fact-checkers together.

By Bill Adair - October 20, 2021

My opening remarks for Global Fact 8 on Oct. 20, 2021, delivered for the second consecutive year from Oslo, Norway.

Thanks, Baybars!

Welcome to Norway!

(Pants on Fire!)

It’s great to be here once again among your fiords and gnomes and your great Norwegian meatballs!

(Pants on Fire!)

What….I’m not in Norway?

Well, it turns out I’m still in Durham…again!

And once again we are joined together through the magic of video and more importantly by our strong sense of community. That’s the theme of my remarks today.

Seven years ago, a bunch of us crammed into a classroom in London. I had organized the conference with Poynter because I had heard from several of you that there was a desire for us to come together. It was a magical experience that we all had in the London School of Economics. We were able to discuss our common experiences and challenges.

As I noted in a past speech, one of our early supporters, Tom Glaisyer of the Democracy Fund, gave us some critical advice when I was planning the meeting with the folks at Poynter. Tom said, “Build a community, not an association.” His point was that we should be open and welcoming and that we shouldn’t erect barriers about who could take part in our group. That’s been an important principle in the IFCN and one that’s been possible with Poynter as our home.

You can see the community every week in our email listserv. Have you looked at some of those threads? Lately they’ve helped fact-checkers find the status of COVID passports in countries around the world, learn which countries allow indoor dining and which were still in lockdown. All of that is possible because of the wonderful way we help each other.

Global Fact keeps getting bigger and bigger. It was so big in Cape Town that we needed a drone to take our group photo. At this rate, for our next get-together, we’ll need to take the group photo from a satellite.

Tom’s advice has served us really well. By establishing the IFCN as a program within the Poynter Institute, a globally renowned journalism organization, we have not only built a community, we avoided the bureaucracy and frustration of creating a whole new organization.

We stood up the IFCN quickly, and it became a wonderfully global organization, with a staff and advisory board that represents a mix of fact-checkers from every continent — except for Antarctica (at least not yet!).

Our community succeeded in creating a common Code of Principles that may well be the only ethical framework in journalism that includes a real verification and enforcement mechanism.

The Poynter-based IFCN, with its many connections in journalism and tech, has raised millions of dollars for fact-checkers all over the world.

And we have done all this without bloated overhead, new legal entities and insular meetings that would distract us from our real work — finding facts and dispelling bullshit. For most fact-checkers, running our own organizations or struggling for resources within our newsrooms is already time-consuming enough.

As we look to the future, some fact-checkers from around the world have offered ideas about how the IFCN can improve. I like many of their suggestions.

Let’s start with the money the IFCN distributes. The fundraising I mentioned is amazing: more than $3 million since March 2020. It’s pretty cool how that gets distributed. All of that money came from major tech companies in the United States, and 84% of it goes to fact-checkers OUTSIDE the US.

But we can be even more transparent about all of that, just as IFCN’s principles demand transparency of its signatories. We can also continue to expand the advisory board to be even more representative of our growing community.

Some other improvements:

We should demand more data and transparency from our funders in the tech community. Fact-checkers also can advocate to make sure that our large tech partners treat members of our community fairly. And we can work together more closely to find new sources of revenue to pay for our work, whether that’s through IFCN or other collaborations.

One possible way is to arrange a system so fact-checkers can get paid for publishing fact-checks with ClaimReview, the tagging system that our Reporters’ Lab developed with Google and Jigsaw. (A bit of our own transparency – they supported our work on that and a similar product for images and video called MediaReview.) Our goal at Duke is to help fact-checkers expand their audiences and create new ways for you to get paid for your important work.

Our community also needs more diverse funding sources, to avoid relying too heavily on any one company or sector. But we also need to be realistic and recognize the financial and legal limitations of the funders, and of our fact-checkers, who represent an incredibly wide range of business models. Some of you have good ideas about that. And we should be talking more about all of that.

The IFCN and Global Fact provide essential venues for us to discuss these issues and make progress together – as do the regional fact-checking collaboratives and networks, from Latin America to Central Europe to Africa, and the numerous country-specific collaborations in Japan, Indonesia and elsewhere. What a dazzling movement we have built – together.

If there’s a message in all this, it’s that all of us need to convene and talk more often. The pandemic has made that difficult. This is the second year we have had to meet virtually, and like most of you, I too am sick of talking to my laptop, as I am now.

For now, though, let’s be grateful for the community we have. It’s sunny here in Norway today.

[Pants on Fire!]

I’m looking forward to seeing you in person next year!

The lessons of Squash, our groundbreaking automated fact-checking platform

Squash fulfilled our dream of instant checks on speeches and debates. But to work at scale, we need more fact-checks.

By Bill Adair - June 28, 2021

Squash began as a crazy dream.

Soon after I started PolitiFact in 2007, readers began suggesting a cool but far-fetched idea. They wanted to see our fact checks pop up on live TV.

That kind of automated fact-checking wasn’t possible with the technology available back then, but I liked the idea so much that I hacked together a PowerPoint of how it might look. It showed a guy watching a campaign ad when PolitiFact’s Truth-O-Meter suddenly popped up to indicate the ad was false.

It took 12 years, but our team in the Duke University Reporters’ Lab managed to make the dream come true. Squash (our code name for the project, chosen because it is a nutritious vegetable and a good metaphor for stopping falsehoods) has been a remarkable success. It displays fact checks seconds after politicians utter a claim, and it largely does what those readers wanted in 2007.

But Squash also makes lots of mistakes. It converts politicians’ speech to the wrong text (often with funny results) and it frequently stays idle because there simply aren’t enough claims that have been checked by the nation’s fact-checking organizations. It isn’t quite ready for prime time.

As we wrap up four years on the project, I wanted to share some of our lessons to help developers and journalists who want to continue our work. There is great potential in automated fact-checking and I’m hopeful that others will build on our success.

When I first came to Duke in 2013 and began exploring the idea, it went nowhere. That’s partly because the technology wasn’t ready and partly because I was focused on the old way that campaign ads were delivered — through conventional TV. That made it difficult to isolate ads the way we needed to.

But the technology changed. Political speeches and ads migrated to the web and my Duke team partnered with Google, Jigsaw and Schema.org to create ClaimReview, a tagging system for fact-check articles. Suddenly we had the key elements that made instant fact-checking possible: accessible video and a big database of fact checks.

I wasn’t smart enough to realize that, but my colleague Mark Stencel, the co-director of the Reporters’ Lab, was. He came into my office one day and said ClaimReview was a game changer. “You realize what you’ve done, right? You’ve created the magic ingredient for your dream of live fact-checking.” Um … yes! That had been my master plan all along!

Fact-checkers use the ClaimReview tagging system to indicate the person and claim being checked. That not only helps Google highlight the articles in search results but also creates a big database of checks that Squash can tap.
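To make that concrete, here is a minimal Python sketch of the kind of structured record a ClaimReview tag carries, written as the JSON-LD that fact-checkers can embed in their pages. The property names (claimReviewed, itemReviewed, reviewRating and so on) follow the schema.org ClaimReview type; the helper function, the 1-to-5 rating scale and every value shown (URL, speaker, publisher, claim) are hypothetical placeholders, not a real fact check.

```python
import json


def build_claim_review(url, claim_text, speaker, publisher,
                       rating_label, rating_value, date_published):
    """Assemble a ClaimReview record as a JSON-LD-ready dict.

    Property names follow schema.org's ClaimReview type; the values
    passed in are hypothetical placeholders.
    """
    return {
        "@context": "https://schema.org",
        "@type": "ClaimReview",
        "url": url,
        "datePublished": date_published,
        "claimReviewed": claim_text,
        # The person (or entity) who made the claim being checked.
        "itemReviewed": {
            "@type": "Claim",
            "author": {"@type": "Person", "name": speaker},
        },
        # The fact-checking organization that published the article.
        "author": {"@type": "Organization", "name": publisher},
        # The conclusion: a numeric rating plus the publisher's own label.
        # The 1-to-5 scale is an assumption for this example.
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating_value,
            "bestRating": 5,
            "worstRating": 1,
            "alternateName": rating_label,
        },
    }


record = build_claim_review(
    url="https://example.org/fact-checks/123",
    claim_text="Hypothetical claim about unemployment",
    speaker="Hypothetical Senator",
    publisher="Example Fact-Checker",
    rating_label="False",
    rating_value=1,
    date_published="2021-06-28",
)
print(json.dumps(record, indent=2))
```

Because every check exposes the same fields in the same places, a program can pull the speaker, the claim and the rating out of thousands of articles without parsing each outlet's prose.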

It would be difficult to overstate the technical challenge we were facing. No one had attempted this kind of work beyond doing a demo, so there was no template to follow. Fortunately we had a smart technical team and some generous support from the Knight Foundation, Craig Newmark and Facebook.

Christopher Guess, our wicked-smart lead technologist, had to invent new ways to do just about everything, combining open-source tools with software that he built himself. He designed a system to ingest live TV and process the audio for instant fact-checking. It worked so fast that we had to slow down the video.

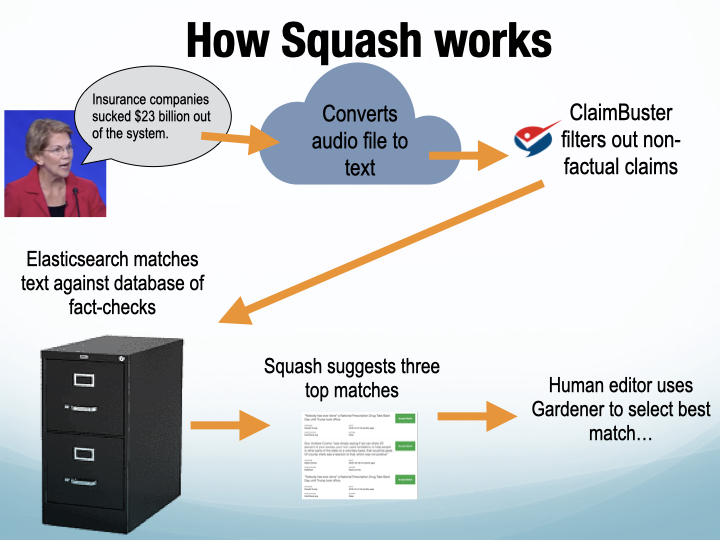

To reduce the massive amount of computer processing, a team of students led by Duke computer science professor Jun Yang came up with a creative way to filter out sentences that did not contain factual claims. They used ClaimBuster, an algorithm developed at the University of Texas at Arlington, to act like a colander that kept only good factual claims and let the others drain away.
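The colander idea boils down to scoring each sentence and keeping only those above a cutoff. In this sketch, toy_score is a crude, hypothetical stand-in for ClaimBuster (it just rewards digits and a few statistics-style words); it is not ClaimBuster's model or API, and the 0.5 threshold is an assumption chosen for illustration.

```python
from typing import Callable, Iterable, List


def colander(sentences: Iterable[str],
             score: Callable[[str], float],
             threshold: float = 0.5) -> List[str]:
    """Keep sentences whose check-worthiness score meets the threshold; drain the rest."""
    return [s for s in sentences if score(s) >= threshold]


# Hypothetical cue words; a crude substitute for a trained claim-spotting model.
CUE_WORDS = {"percent", "million", "billion", "jobs", "tax", "taxes",
             "increased", "decreased"}


def toy_score(sentence: str) -> float:
    """Toy stand-in for ClaimBuster: rewards digits and statistics-style words."""
    has_number = any(ch.isdigit() for ch in sentence)
    hits = sum(1 for w in sentence.lower().split() if w.strip(".,!?") in CUE_WORDS)
    return min(1.0, 0.5 * has_number + 0.25 * hits)


sentences = [
    "Thank you all for coming tonight.",
    "Unemployment fell to 4 percent last year.",
    "God bless America.",
]
print(colander(sentences, toy_score))
# → ['Unemployment fell to 4 percent last year.']
```

The payoff is in what never gets processed further: pleasantries and applause lines are dropped before any expensive matching runs.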

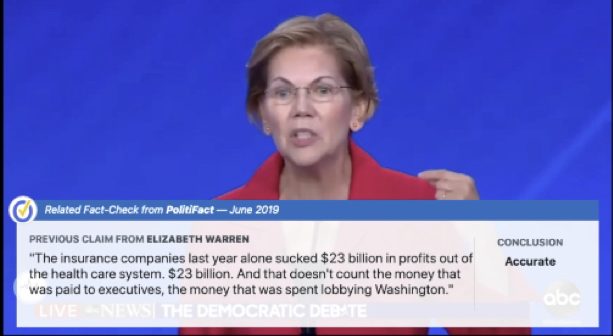

Today, this is how Squash works: It “listens” to a speech or debate, sending audio clips to Google Cloud that are converted to text. That text is then run through ClaimBuster, which identifies sentences the algorithm believes are good claims to check. They are compared against the database of published fact checks to look for matches. When one is found, a summary of that fact check pops up on the screen.
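The four stages described above can be sketched as a small Python generator. This is an illustration under stated assumptions, not Squash's code: the speech-to-text call, the claim filter and the fact-check lookup are passed in as plain functions (faked here with lambdas), and the FactCheck record, the naive sentence splitter and the sample data are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List, Optional


@dataclass
class FactCheck:
    speaker: str
    claim: str
    rating: str
    url: str


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def squash_style_pipeline(
    audio_clips: Iterable[bytes],
    transcribe: Callable[[bytes], str],                # hypothetical speech-to-text hook
    is_checkable: Callable[[str], bool],               # hypothetical claim filter
    find_match: Callable[[str], Optional[FactCheck]],  # hypothetical fact-check lookup
) -> Iterator[str]:
    """Yield pop-up text for each sentence that matches a published fact check."""
    for clip in audio_clips:
        text = transcribe(clip)                        # 1. speech to text
        for sentence in split_sentences(text):
            if not is_checkable(sentence):             # 2. claim filter
                continue
            match = find_match(sentence)               # 3. look up published checks
            if match is not None:                      # 4. show a summary
                yield f'Related fact-check: {match.speaker}, "{match.claim}" ({match.rating})'


# Hypothetical data standing in for live audio and the fact-check database.
db = [FactCheck("Hypothetical Senator", "Unemployment fell to 4 percent",
                "Mostly True", "https://example.org/fc/1")]
fake_transcripts = {b"clip-1": "Thanks for having me. Unemployment fell to 4 percent."}

pops = list(squash_style_pipeline(
    [b"clip-1"],
    transcribe=lambda clip: fake_transcripts[clip],
    is_checkable=lambda s: any(ch.isdigit() for ch in s),
    find_match=lambda s: next((fc for fc in db if fc.claim.lower() in s.lower()), None),
))
print(pops)
```

Keeping each stage as a swappable function mirrors how the text describes Squash: a cloud transcription service, an outside claim-spotting algorithm and a search over published checks, chained together.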

The first few times you see the related fact check appear on the screen, it’s amazing. I got chills. I felt I was getting a glimpse of the future. The dream of those PolitiFact readers from 2007 had come true.

But …

Look a little closer and you will quickly realize that Squash isn’t perfect. If you watch in our web mode, which shows Squash’s AI “brain” at work, you will see plenty of mistakes as it converts voice to text. Some are real doozies.

Last summer during the Democratic convention, former Iowa Gov. Tom Vilsack said this: “The powerful storm that swept through Iowa last week has taken a terrible toll on our farmers …”

But Squash (it was really Google Cloud) translated it as “Armpit sweat through the last week is taking a terrible toll on our farmers.”

Squash’s matching algorithm also makes too many mistakes finding the right fact check. Sometimes it is right on the money. It often correctly matched then-President Donald Trump’s statements on China, the economy and the border wall.

But other times it comes up with bizarre matches. Guess and our project manager Erica Ryan, who spends hours analyzing the results of our tests, believe this often happens because Squash mistakenly thinks an individual word or number is important. (Our all-time favorite was in our first test, when it matched a sentence by President Trump about men walking on the moon with a Washington Post fact-check about the bureaucracy for getting a road permit. The match occurred because both included the word “years.”)
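A toy example shows how a single shared word can drive a bad match. The matcher below simply counts overlapping words after dropping a short stopword list; it is a hypothetical illustration, not the off-the-shelf search engines Squash actually used, and the two sample sentences paraphrase the moon-and-road-permit mix-up rather than quoting it.

```python
import re
from typing import List, Optional, Tuple

# A short, illustrative stopword list (an assumption, not Squash's).
STOPWORDS = frozenset({"a", "an", "the", "is", "it", "on", "of", "has",
                       "been", "since", "being", "and", "to", "in"})


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def best_match(sentence: str, claims: List[str],
               min_overlap: int = 1) -> Optional[Tuple[str, int]]:
    """Return the published claim sharing the most non-stopwords with the sentence."""
    words = tokens(sentence) - STOPWORDS
    best, best_score = None, 0
    for claim in claims:
        score = len(words & (tokens(claim) - STOPWORDS))
        if score > best_score:
            best, best_score = claim, score
    if best is None or best_score < min_overlap:
        return None
    return best, best_score


claims = [
    "Getting a road permit takes years of paperwork.",
    "The border wall is already being built.",
]
sentence = "It has been 50 years since men walked on the moon."
print(best_match(sentence, claims))
# → ('Getting a road permit takes years of paperwork.', 1)
print(best_match(sentence, claims, min_overlap=2))
# → None
```

Raising the overlap requirement removes the bizarre match, but only by returning nothing at all, which is the same trade-off between wrong matches and an idle screen that runs through the rest of this piece.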

To reduce the problem, Guess built a human editing tool called Gardener that enables us to weed out the bad matches. That helps a lot because the editor can choose the best fact check or reject them all.
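One way to model that workflow is a review queue in which nothing reaches the screen until an editor either approves a single candidate or rejects them all. The class, function and placeholder strings below are hypothetical; the sketch illustrates only that gating rule, not how Gardener was actually built.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CandidateMatch:
    """One transcribed sentence awaiting a human decision (hypothetical model)."""
    sentence: str
    candidates: List[str]           # fact checks proposed by the matcher
    approved: Optional[str] = None
    reviewed: bool = False

    def choose(self, index: int) -> None:
        """Editor picks the best candidate; only this one may be displayed."""
        self.approved = self.candidates[index]
        self.reviewed = True

    def reject_all(self) -> None:
        """Editor decides none of the candidates fits the sentence."""
        self.approved = None
        self.reviewed = True


def ready_to_display(queue: List[CandidateMatch]) -> List[str]:
    """Nothing reaches the screen without an explicit human approval."""
    return [item.approved for item in queue
            if item.reviewed and item.approved is not None]


a = CandidateMatch("We built 400 miles of wall.", ["Border wall check", "Road permit check"])
b = CandidateMatch("Men walked on the moon 50 years ago.", ["Road permit check"])
c = CandidateMatch("Taxes went up.", ["Tax check"])
a.choose(0)
b.reject_all()
print(ready_to_display([a, b, c]))  # c has not been reviewed yet
# → ['Border wall check']
```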

The most frustrating problem is that a lot of the time, Squash just sits there, idle, even when politicians are spewing sentences packed with factual claims. Squash is working properly, Guess assures us; it just isn’t finding any fact checks that are even close. This happened in our latest test, a news conference by President Joe Biden, when Squash could muster only two matches in more than an hour.

The cause is simple: There are not enough published fact checks to power Squash (or any other automated app).

We need more fact checks – As I noted in the previous section, this is a major shortcoming that will hinder anyone who wants to draw from the existing corpus of fact checks. Despite the steady growth of fact-checking in the United States and around the world, and despite the boom that occurred in the Trump years, too few politicians have been checked often enough to provide the matches that Squash and similar apps need.

We had our greatest success during debates and party conventions, events when Squash could draw from a relatively large database of checks on the candidates from PolitiFact, FactCheck.org and The Washington Post. But we could not use Squash on state and local events because there were too few fact-checks to match against.

Ryan and Guess believe we need dozens of fact checks on a single candidate, across a broad range of topics, to have enough to make Squash work.

More armpit sweat is needed to improve voice to text – We all know the limitations of Siri, which still gets a lot of words wrong despite years of tweaks and improvements by Apple. That’s a reminder that improving voice-to-text technology remains a difficult challenge. It’s especially hard in political events when audio can be inconsistent and when candidates sometimes shout at each other. (Identifying speakers in debates is yet another problem.)

As we currently envision Squash and this type of automated fact-checking, we are reliant on voice-to-text translations, but given the difficulty of automated “hearing,” we’ll have to accept a certain error level for the foreseeable future.

Matching algorithms can be improved – This is one area that we’re optimistic about. Most of our tests relied on off-the-shelf search engines to do the matching, until Guess began to experiment with a new approach. That approach relies on subject tags (which unfortunately are not included in ClaimReview) to help the algorithm make smarter choices and avoid irrelevant matches.

The idea is that if Squash knows the claim is about guns, it would find the best matches from published fact checks that have been tagged under the same subject. Guess found this approach promising but did not get a chance to test it at scale.
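A minimal sketch of subject-tag filtering, assuming each published fact check carries a hypothetical "tags" field (ClaimReview itself has no such property) and that a simple keyword map can guess a sentence's subject. Only checks that share a subject with the sentence are ranked, so a check about road permits cannot be matched to a sentence about the moon just because both mention “years.”

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical keyword-to-subject map; real subject tags would have to come
# from the fact-checkers, since ClaimReview does not carry them.
TOPIC_KEYWORDS: Dict[str, Set[str]] = {
    "guns": {"gun", "guns", "firearm", "firearms", "rifle"},
    "immigration": {"border", "wall", "immigrants", "asylum"},
    "space": {"moon", "nasa", "astronauts"},
}


def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def detect_topics(sentence: str) -> Set[str]:
    """Guess which subjects a sentence is about from keyword hits."""
    w = words(sentence)
    return {topic for topic, keys in TOPIC_KEYWORDS.items() if w & keys}


def tagged_match(sentence: str, checks: List[dict]) -> Optional[dict]:
    """Rank only the checks whose tags share a subject with the sentence."""
    topics = detect_topics(sentence)
    pool = [c for c in checks if topics & set(c["tags"])]
    if not pool:
        return None  # better to stay idle than to show an off-topic check
    w = words(sentence)
    return max(pool, key=lambda c: len(w & words(c["claim"])))


checks = [
    {"claim": "Road permits take years to approve.", "tags": ["infrastructure"]},
    {"claim": "Gun sales doubled in five years.", "tags": ["guns"]},
]
print(tagged_match("Gun violence has risen for years.", checks)["claim"])
# → Gun sales doubled in five years.
print(tagged_match("It has been 50 years since men walked on the moon.", checks))
# → None
```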

Until the matching improves, we’ve found humans are still needed to monitor and manage anything that gets displayed — as we did with our Gardener tool.

Ugh, UX – The simplest part of my vision, the Truth-O-Meter popping up on the screen, ended up being one of our most complex challenges. Yes, Guess was able to make the meter or the Washington Post Pinocchios pop up, but what were they referring to? This question of user experience was tricky in several ways.

First, we were not providing an instant fact check of the statement that was just said. We were popping up a summary of a related fact check that was previously published. Because politicians repeat the same talking points, the statements were generally similar and, in some cases, even identical. But we couldn’t guarantee that, so we labeled the pop-up “Related fact-check.”

Second, the fact check appeared during a live, fast-moving event. So we realized it could be unclear to viewers which previous statement the pop-up referred to. This was especially tricky in a debate when candidates traded competing factual claims. The pop-up could apply to either of them. But the visual design that seemed so simple for my PowerPoint a decade earlier didn’t work in real life. Was that “False” Truth-O-Meter for the immigration statement Biden made? Or the one Trump made?

Another UX problem: To give people time to read all the text (the related fact checks sometimes had lengthy statements), Guess had them linger on the screen for 15 seconds. And our designer Justin Reese made them attractive and readable. But by the end of that time the candidates might have made two more factual claims, further confusing viewers who saw the “False” meter.
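The timing problem can be put in numbers. With a 15-second display, any claim made between the moment a pop-up appears and the moment it disappears competes for the viewer's attention. The helper and the debate timeline below are hypothetical, invented only to illustrate that overlap.

```python
from typing import List, Tuple

LINGER_SECONDS = 15.0  # how long each pop-up stayed on screen, per the text


def claims_during_popup(popup_start: float,
                        timeline: List[Tuple[float, str]],
                        linger: float = LINGER_SECONDS) -> List[str]:
    """Return who made each claim while a pop-up was still on screen."""
    popup_end = popup_start + linger
    return [speaker for t, speaker in timeline if popup_start < t < popup_end]


# Hypothetical debate timeline: (seconds into the debate, speaker of a factual claim).
timeline = [(98.0, "Candidate A"), (104.5, "Candidate B"),
            (111.0, "Candidate A"), (120.0, "Candidate B")]

# A pop-up triggered by the claim at 98 s appears at 100 s and lingers until 115 s.
print(claims_during_popup(100.0, timeline))
# → ['Candidate B', 'Candidate A']
```

Shortening the display shrinks the overlap but leaves less time to read; lengthening it does the reverse, which is why the choice of display time is a design trade-off rather than a bug to fix.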

So UX wasn’t just a problem, it was a tangle of many problems involving limited space on the screen (What should we display and where? Will readers understand the concept that the previous fact check is only related to what was just said?), time (How long should we display it in relation to when the politician spoke?) and user interaction (Should our web version allow users to pause the speech or debate to read a related fact check?). It’s an enormously complicated challenge.

* * *

Looking back at my PowerPoint vision of how automated fact-checking would work, we came pretty close. We succeeded in using technology to detect political speech and make relevant fact checks automatically pop up on a video screen. That’s a remarkable achievement, a testament to groundbreaking work by Guess and an incredible team.

But there are plenty of barriers that make it difficult for us to realize the dream and will challenge anyone who tries to tackle this in the future. I hope others can build on our successes, learn from our mistakes, and develop better versions in years to come.

MediaReview Testing Expands to a Global Userbase

The Duke Reporters’ Lab is launching the next phase of development of MediaReview, a tagging system that fact-checkers can use to identify whether a video or image has been manipulated.

By - June 3, 2021


Conceived in late 2019, MediaReview is a sibling to ClaimReview, which allows fact-checkers to clearly label their articles for search engines and social media platforms. The Reporters’ Lab has led an open development process, consulting with tech platforms like Google, YouTube and Facebook, and with fact-checkers around the world.

Testing of MediaReview began in April 2020 with the Lab’s FactStream partners: PolitiFact, FactCheck.org and The Washington Post. Since then, fact-checkers from those three outlets have logged more than 300 examples of MediaReview for their fact-checks of images and videos.

We’re ready to expand testing to a global audience and we’re pleased to announce that fact-checkers can now add MediaReview to their fact-checks through Google’s Fact Check Markup Tool, a tool which many of the world’s fact-checkers currently use to create ClaimReview. This will bring MediaReview testing to more fact-checkers around the world, the next step in the open process that will lead to a more refined final product.

ClaimReview was developed through a partnership of the Reporters’ Lab, Google, Jigsaw, and Schema.org. It provides a standard way for publishers of fact-checks to identify the claim being checked, the person or entity that made the claim, and the conclusion of the article. This standardization enables search engines and other platforms to highlight fact-checks, and can power automated products such as the FactStream and Squash apps being developed in the Reporters’ Lab.