Fact-checking count tops 300 for the first time

The Reporters' Lab finds fact-checkers at work in 84 countries, but growth in the U.S. has slowed

By Mark Stencel and - October 13, 2020

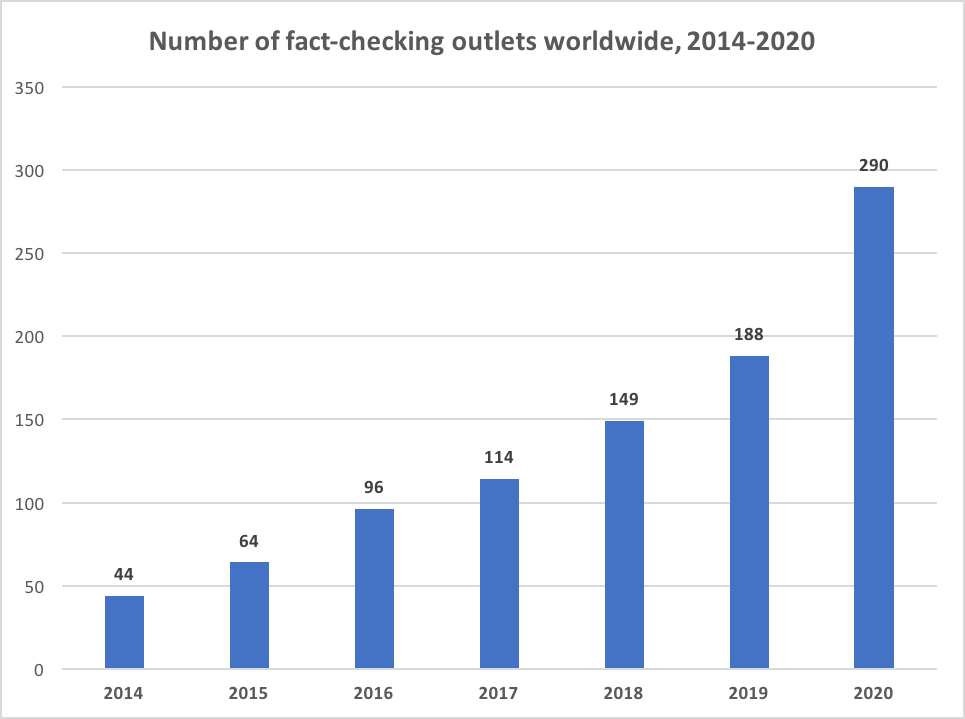

The number of active fact-checkers around the world has topped 300 — about 100 more than the Duke Reporters’ Lab counted this time a year ago.

Some of that growth is due to the 2020 election in the United States, where the Lab’s global database and map now list 58 fact-checking projects. That’s more than twice as many as any other country, and nearly a fifth of the current worldwide total: 304 in 84 countries.

But the U.S. is not driving the worldwide increase.

The last U.S. presidential election sounded an alert about the effects of misinformation, especially on social media. But those concerns weren’t just “made in America.” From the 2016 Brexit vote in the U.K. to this year’s coronavirus pandemic, events around the globe have led to new fact-checking projects that call out rumors, debunk hoaxes and help the public identify falsehoods.

The current fact-checking tally is up 14 from the 290 the Lab reported in its annual fact-checking census in June.

Over the past four years, growth in the U.S. has been sluggish, at least compared with other parts of the world, where Facebook, WhatsApp and Google have provided grants and incentives to enlist fact-checkers’ help in thwarting misinformation on their platforms. (Disclosure: Facebook and Google also provided support for research at the Reporters’ Lab.)

By looking back at the dates when each fact-checker began publishing, we now see there were about 145 projects in 59 countries that were active at some point in 2016. Of those 145, about a third were based in the United States.

The global total more than doubled from 2016 to now. And the number outside the U.S. increased about two and a half times, from 97 to 246.

During those same four years, there were relatively big increases elsewhere. Several countries in Asia saw growth spurts, including Indonesia (which went from 3 fact-checkers to 9), South Korea (3 to 11) and India (3 to 21).

In comparison, the U.S. count in that period is up from 48 to 58.

The comparison is also striking when counting the fact-checkers by continent. The number in South America doubled while the counts for Africa and Asia more than tripled. The North American count was up too — by a third. But the non-U.S. increase in North America was more in line with the pace elsewhere, nearly tripling from 5 to 14.
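To make the comparison concrete, here is a short Python calculation of growth factors from the counts quoted above. The North America totals (53 in 2016, 72 now) are not stated in the article; they are derived here by adding the U.S. and non-U.S. figures, so treat them as an assumption.

```python
# 2016 vs. October 2020 counts quoted in this article.
# North America totals are derived (U.S. + non-U.S.), not quoted directly.
counts = {
    "Worldwide": (145, 304),
    "United States": (48, 58),
    "Outside the U.S.": (97, 246),
    "North America (derived)": (48 + 5, 58 + 14),
    "North America outside the U.S.": (5, 14),
    "Indonesia": (3, 9),
    "South Korea": (3, 11),
    "India": (3, 21),
}


def growth_factor(before: int, after: int) -> float:
    """How many times larger the later count is than the earlier one."""
    return after / before


for region, (before, after) in counts.items():
    print(f"{region:32} {before:>4} -> {after:>4}   x{growth_factor(before, after):.2f}")
```

The ratios line up with the text: the worldwide count grew about 2.1 times, the non-U.S. count about 2.5 times, and the U.S. count only about 1.2 times.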

These global tallies leave out 19 other fact-checkers that have launched since 2016 but are no longer active. Among those 19 were short-lived, election-focused initiatives, sometimes involving multiple news partners, in France, Norway, Mexico, Sweden, Nigeria, the Philippines, Argentina and the European Union.

Several factors seem to account for the slower growth in the U.S. For instance, many of the country’s big news media outlets have already done fact-checking for years, especially during national elections. So there is less room for fact-checking to grow at that level.

USA Today was one of the few major media newcomers to the national fact-checking scene in the U.S. since 2016. The others were more niche, including The Daily Caller’s Check Your Fact, the Poynter Institute’s MediaWise Teen Fact-Checking Network and The Dispatch. In addition, the French news service AFP started a U.S.-based effort as part of its push to establish fact-checking teams in many of its dozens of international bureaus. The National Academies of Sciences, Engineering and Medicine also launched a fact-checking service called “Based on Science,” one of a number of science- and health-focused fact-checking projects around the world.

Of the 58 U.S. fact-checkers, 36 are focused on state and local politics, especially during regional elections. Some of these local media outlets have been at it for years. They include some of PolitiFact’s longstanding state-level news partners, as well as outlets that work on their own, such as WISC-TV in Madison, Wisconsin, which began its News 3 Reality Check segments in 2004. There also are one-off election projects that come to an end as soon as the voting is over.

A wildcard in our Lab’s current U.S. count is the push to increase local fact-checking across large national news chains. One such newcomer since the 2016 election is Tegna, a locally focused TV company with more than 50 stations across the country. It encourages its stations’ news teams to produce fact-checking reports as part of the company’s “Verify” initiative, though some stations do more regular fact-checking than others. Tegna also has a national fact-checking team that produces segments for use by its local stations. A few other media chains are mounting similar efforts, including some of the local stations owned by Nexstar Inc. and more than 260 newspapers and websites operated by USA Today’s owner, Gannett. Those are promising signs.

There’s still plenty of room for more local fact-checking in the U.S. At least 20 states have one or more regionally focused fact-checking projects already. The Reporters’ Lab is keeping a watchful eye out for new ventures in the other 30.

Note about our methodology: Here’s how we decide which fact-checkers to include in the Reporters’ Lab database. The Lab continually collects new information about the fact-checkers it identifies, such as when they launched and how long they last. That’s why the updated numbers for 2016 used in this article are higher than the counts the Lab reported in its annual fact-checking census from February 2017. If you have questions or updates, please contact Mark Stencel or Joel Luther.

Related Links: Previous fact-checking census reports

‘It’s great to be here in Norway!’

For the kickoff of Global Fact 7, a celebration of the IFCN's community.

By Bill Adair - June 22, 2020

My opening remarks for Global Fact 7, delivered from Oslo, Norway*, on June 22, 2020.

I’m going to do something a little different this year. I’m going to fact-check my own opening remarks using this antique PolitiFact Pants on Fire button. You know Pants on Fire – it’s our rating for the most ridiculous falsehoods.

First, I want to say that it’s great to be here in Norway! (Pants on Fire!)

I love Norway because I’ve always loved the great plastic furniture I buy from your famous furniture store IKEA! (Pants on Fire!)

I am accompanied today by this Norwegian gnome, which I know is very much a symbol of your great country! (Pants on Fire!)

Okay… I’m actually here in Durham, North Carolina, but thanks to the magic of pixels and… Baybars!… I’m with you!

First, some news…

As you probably know, every year before Global Fact, the Duke Reporters’ Lab conducts a census of fact-checking to count the world’s fact-checkers and we are out today with the number. This is the product of painstaking work by Mark Stencel, Joel Luther, Mimi Goldstein and Matthew Griffin. Our count this year is 290 fact-checking projects in 83 countries. That’s up from 188 in 60 countries a year ago.

When I heard that, my first thought was… fact-checking keeps growing!

I should note that Mark Stencel has been working hard on this, staying up all night for the last few days to get it done. So I should reveal my secret: we pay him in coffee!

This morning, I’m going to talk briefly about community.

Back in 2014, when we started planning the first meeting of the world’s fact-checkers, a gathering small enough that we could all squeeze into a classroom at the London School of Economics, Tom Glaisyer of the Democracy Fund gave me some important advice. Build a community, Tom said, not an association. The way to help the fact-checking movement was to be inviting and encourage journalists to start fact-checking. We’ve done that, and this meeting, and our group, keep getting larger. You could even say that… fact-checking keeps growing!

And as a bonus, we also managed to establish the Code of Principles, which provides an important incentive for transparency and fairness in your fact-checking.

My favorite example of the IFCN’s spirit of community is the simplest: our email threads. They are often amazing! A fact-checker will write with a problem they are having and community members from all over the world will respond with suggestions and even help them do the work.

Did you see the amazing one a couple of months ago? Samba of Africa Check wrote about a video that claimed to show violence against Africans in China. He knew it was fake but was not sure where it was from, so he circulated the video by email. That led to a remarkable exchange.

A coordinator from Witness in the United States said the video had been posted on Reddit, suggesting it was from New York. Jacques Pezet of Libération in France took the image and used Google Maps to find the New York intersection where it was filmed. And then Gordon Farrer, an Australian researcher, used Google Street View to identify the business – a dental office called Brace Yourself.

All of this showed up in Samba’s fact-check for Africa Check in Senegal.

Amazing! All the product of our community!

Another great example: the tremendous work by the IFCN bringing together the world’s fact-checkers to debunk falsehoods about COVID-19. The CoronaVirusFacts Alliance has now collected more than 6,000 fact-checks. It, too, is a product of community, organized by Baybars and Cris Tardaguila.

And one more: MediaReview. You’re going to hear about it tomorrow. It grew out of some great work by the Washington Post and we’ve been working on it with Baybars and PolitiFact and FactCheck.org and fact-checkers from around the world who attended a meeting in January. It’s a new tagging system like ClaimReview that you’ll be able to use for videos and images. I’m more excited about MediaReview than anything I’ve done since PolitiFact because it could really have an impact in the battle against misinformation.

Finally, I want to give some shoutouts to two marvelous people who embody this commitment to community. Peter Cunliffe-Jones has been an amazing builder who has done extraordinary things to bring fact-checking to Africa. And Laura Zommer has been tireless helping dozens of fact-checkers get started in Latin America.

Together, they show what’s wonderful about the IFCN: they believe in our important journalism and they have given their time and energy to help it grow.

Our community grows thanks to these wonderful leaders. I look forward to sharing a glass of wine with them — and you — next year in Oslo!

Bundle up!

*Actually, these remarks were delivered from my backyard in Durham, North Carolina.

Annual census finds nearly 300 fact-checking projects around the world

Growth is fueled by politics, protests and pandemic

By Mark Stencel and - June 22, 2020

With elections, unrest and a global pandemic generating a seemingly endless supply of falsehoods, the Duke Reporters’ Lab finds at least 290 fact-checking projects are busy debunking those threats in 83 countries.

That count is up from 188 active projects in more than 60 countries a year ago, when the Reporters’ Lab issued the annual census at the Global Fact Summit in South Africa. There has been so much growth that the number of active fact-checkers added in the past year alone is more than double the total the Lab counted when it began keeping track in 2014.

There has been plenty of news to keep those fact-checkers busy, including widespread protests in countries such as Chile and the United States. Events like these attract a broad range of new fact-checkers — some from well-established media companies, as well as industrious startups, good-government groups and journalism schools.

Our global database and map show considerable growth in Asia, particularly India, where the Lab currently counts at least 20 fact-checkers. We also saw a spike in Chile that started with the nationwide unrest there last fall.

Fact-Checkers by Continent Since June 2019

| Continent | June 2019 | June 2020 |
|---|---|---|
| Africa | 9 | 19 |
| Asia | 35 | 75 |
| Australia | 5 | 4 |
| Europe | 61 | 85 |
| North America | 60 | 69 |
| South America | 18 | 38 |
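The continent counts above add up exactly to the two census totals. A short Python check, using only the figures listed for each continent:

```python
# Continent counts from the June 2019 and June 2020 censuses.
census = {
    "Africa": (9, 19),
    "Asia": (35, 75),
    "Australia": (5, 4),
    "Europe": (61, 85),
    "North America": (60, 69),
    "South America": (18, 38),
}

total_2019 = sum(before for before, _ in census.values())
total_2020 = sum(after for _, after in census.values())
added = total_2020 - total_2019  # net change; closures are already subtracted

print(f"Total: {total_2019} -> {total_2020} (+{added})")
for continent, (before, after) in census.items():
    print(f"{continent:15} {after - before:+d}")
```

The totals come to 188 and 290, a net gain of 102. Because the census also notes at least nine closures during the year, the number of projects that were actually added is somewhat higher than the net figure.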

But the coronavirus pandemic has been the story that has topped almost every fact-checking page in every country since February.

At least five fact-checkers on the Lab’s map already focused on public health and medical claims. One of the newest is The Healthy Indian Project, which launched last year. But the pandemic has turned almost every fact-checking operation into a team of health reporters. And the International Fact-Checking Network also has coordinated coverage through its #CoronaVirusFacts Alliance.

The pandemic has also turned IFCN’s 2020 Global Fact meeting into a virtual conference this week, instead of the in-person gathering originally planned in Oslo, Norway. And one of the themes participants will be talking about is the set of institutional factors that have generated more interest and attention for fact-checkers.

To combat increasing online misinformation, major digital platforms in the United States, including Facebook, WhatsApp, Google and YouTube, have provided incentives to fact-checkers, including direct contributions, competitive grants and help with technological infrastructure to increase the distribution of their work. (Disclosure: Facebook and Google separately help fund research and development projects at the Reporters’ Lab, and the Lab’s co-directors have served as judges for some grants.)

Many of these funding opportunities were specifically for signatories of the IFCN’s Code of Principles, a charter that requires independent assessors to regularly review the editorial and ethical practices of each fact-checker that wants its status verified.

A growing number of fact-checkers are also part of national and regional partnerships. These short-term collaborations can evolve into longer-term partnerships, as we’ve seen with Brazil’s Comprova, Colombia’s RedCheq and Bolivia Verifica. They also can inspire participating organizations to continue fact-checking on their own.

Over time, the Reporters’ Lab has tried to monitor these contributors and note when they have developed into fact-checkers that should be listed in their own right. That’s why our database shows considerable growth in South Korea — home to the long-standing SNU FactCheck partnership based at Seoul National University’s Institute of Communications Research.

As has been the case with each year’s census, some of the growth also comes from established fact-checkers that came to the attention of the Reporters’ Lab after last June’s census was published — offset by at least nine projects that closed down in the months that followed.

But the trend was still strong. In all, 68 of the projects in the database launched since the start of 2019. And more than half of them (40 of the 68) opened for business after the 2019 census, including 11 so far in the first half of 2020. Most of them appear to have staying power: of those 68, only four are no longer operating, and three of those were election-related collaborations that launched as intentionally short-term projects.

We also have tried to be more thorough about discerning among specific projects and outlets that are produced or distributed by different teams within the same or related organizations. The variety of strong fact-checking programs and web pages produced by variously affiliated French public broadcasters is a good example. (Here’s how we decide which fact-checkers to include in the Reporters’ Lab database.)

The increasing tally of fact-checkers, which continues a trend that started in 2014, is remarkable. While this is a challenging time for journalism in just about every country, public alarm about the effects of misinformation is driving demand for credible reporting and research: the work a growing list of fact-checkers is busy doing around the world.

The Reporters’ Lab is grateful for the contributions of student researchers Amelia Goldstein and Matthew Griffin; journalist and media/fact-checking trainer Shady Gebril; fact-checkers Enrique Núñez-Mussa of FactCheckingCL and EunRyung Chong of SNU FactCheck; and the staff of the International Fact-Checking Network. The Reporters’ Lab updates its fact-checking database throughout the year. If you have updates or information, please contact Mark Stencel and Joel Luther.


Pop-up fact-checking moves online: Lessons from our user experience testing

After it became clear pop-up fact-checking was too difficult to display on a TV, we've moved to the web.

By Jessica Mahone - June 11, 2020

We initially wanted to build pop-up fact-checking for a TV screen. But for nearly a year, people have told us in surveys and in coffee shops that they like live fact-checking but they need more information than they can get on a TV.

The testing is a key part of our development of Squash, our groundbreaking live fact-checking product. We started by interviewing a handful of users of our FactStream app. We wanted to know how they found out about the app, how they find fact checks about things they hear on TV, and what they would need to trust live fact-checking. As we saw in our “Red Couch Experiments” in 2018, they were excited about the concept but they wanted more than a TV screen allowed.

We supplemented those interviews with conversations in coffee shops – “guerrilla research” in user experience (UX) terms. And again, the people we spoke with were excited about the concept but wanted more information than a 1740×90 pixel display could accommodate.

The most common request was the ability to access the full published fact-check. Some wanted to know if more than one fact-checker had vetted the claim, and if so, did they all reach the same conclusion? Some just wanted to be able to pause the video.

Since those things weren’t possible with a conventional TV display, we pivoted and began to imagine what live fact-checking would look like on the web.

Bringing Pop-Up Fact-Checking to the Web

In an online whiteboard session, our Duke Tech and Check Cooperative team discussed many possibilities for bringing live fact-checking online, and then, our UX team — students Javan Jiang and Dora Pekec and myself — designed a new interface for live fact-checking and tested it in a series of simple open-ended preference surveys.

In total, 100 people responded to these surveys, in addition to the eight interviews above and a large experiment with 1,500 participants we did late last year about whether users want ratings in on-screen displays (they do).

A common theme emerged in the new research: Make live fact-checking as non-disruptive to the viewing experience as possible. More specifically, we found three things that users want and need from the live fact-checking experience.

- Users prefer a fact-checking display beneath the video. In our initial survey, users could choose if they liked a display beside or beneath the video. About three-quarters of respondents said that a display beneath the video was less disruptive to their viewing, with several telling us that this placement was similar to existing video platforms such as YouTube.

- Users need “persistent onboarding” to make use of the content they get from live fact-checking. A user guide or FAQ is not enough. Squash can’t yet provide real-time fact-checking. It is a system that matches claims made during a televised event to claims previously checked. But users need to be reminded that they are seeing a “related fact-check,” not necessarily a perfect match to the claim they just heard. “Persistent onboarding” means providing users with subtle reminders in the display. For example, when a user hovers over the label “Related Fact Check,” a small box could explain that this is not a real-time fact check but an already published fact check about a similar claim made in the past. This was one of the features users liked most because it kept them from having to find the information themselves.

- Users prefer to see all the available information on the initial screen. Our first test allowed users to expand the display to see more information about the fact check, such as the publisher of the fact check and an explanation of what statement triggered the system to display a fact check. But users said that having to toggle the display to see this information was disruptive.

More to Learn

Though we’ve learned a lot, some big questions remain. We still don’t know what live fact-checking looks like under less-than-ideal conditions. For example, how would users react to a fact check when the spoken claim is true but the relevant fact check is about a claim that was false?

And we need to figure out timing, particularly for multi-speaker events such as debates. When is the right time to display a fact-check after a politician has spoken? And what if the screen is now showing another politician?

And how can we appeal to audiences that are skeptical of fact-checking? One respondent specifically said he’d want to be able to turn off the display because “none of the fact-checkers are credible.” What strategies or content would help make such audiences more receptive to live fact-checking?

As we wrestle with those questions, moving live fact-checking to the web still opens up new possibilities, such as the ability to pause content (we call that “DVR mode”), read fact-checks, and return to the event. We are hopeful this shift in platform will ultimately bring automated fact-checking to larger audiences.

What is MediaReview?

FAQs on the new schema we're helping to develop for fact-checks of images and videos.

By - June 11, 2020

MediaReview is a schema – a tagging system that web publishers can use to identify different kinds of content. It was built specifically for fact-checkers to identify manipulated images and videos, and we think of it as a sibling to ClaimReview, the schema developed by the Reporters’ Lab that allows fact-checkers to identify their articles for search engines and social media platforms.

By tagging their articles with MediaReview, publishers are essentially telling the world, “this is a fact-check of an image or video that may have been manipulated.” The goal is twofold: to allow fact-checkers to provide information to the tech platforms that a piece of media has been manipulated, and to establish a common vocabulary to describe types of media manipulation.
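To make that "telling the world" concrete, here is a rough Python sketch of a MediaReview object serialized as JSON-LD, the format publishers embed in their pages. Because MediaReview was still pending when this was written, treat every property and value name below (MediaReviewItem, mediaItemAppearance, mediaAuthenticityCategory, EditedOrCroppedContent) as an assumption drawn from schema.org's pending vocabulary, not from the draft form described in this FAQ. The URLs and publisher name are hypothetical.

```python
import json

# Minimal MediaReview sketch. All URLs and names are hypothetical; the
# property and enumeration names are assumptions based on schema.org's
# pending MediaReview vocabulary and may not match the 2020 draft.
media_review = {
    "@context": "https://schema.org",
    "@type": "MediaReview",
    "url": "https://factchecker.example/2020/06/edited-video-check",
    "datePublished": "2020-06-11",
    "author": {"@type": "Organization", "name": "Example Fact-Checker"},
    # The verdict about the media item, expressed as a manipulation category.
    "mediaAuthenticityCategory": "https://schema.org/EditedOrCroppedContent",
    "itemReviewed": {
        "@type": "MediaReviewItem",
        # Where the reviewed video appeared online.
        "mediaItemAppearance": [
            {"@type": "VideoObject", "contentUrl": "https://video.example/clip.mp4"}
        ],
    },
}

print(json.dumps(media_review, indent=2))
```

The key design idea is the shared vocabulary: a platform reading many publishers' pages sees the same category names, whichever fact-checker wrote the review.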

We hope these fact-checks will provide the tech companies with valuable new signals about misinformation. We recognize that they are independent from the journalists doing the fact-checking and it is entirely up to them if, and how, they use the signals. Still, we’re encouraged by the interest of the tech companies in this important journalism. By communicating clearly with them in consistent ways, independent fact-checkers can play an important role in informing people around the world.

Who created MediaReview?

The idea for a taxonomy to describe media manipulation was first proposed at our 2019 Tech & Check conference by Phoebe Connelly and Nadine Ajaka of the Washington Post. Their work eventually became The Fact Checker’s Guide to Manipulated Video, which heavily inspired the first MediaReview proposal.

The development of MediaReview has been an open process. A core group of representatives from the Reporters’ Lab, the tech companies, and the Washington Post led the development, issuing open calls for feedback throughout the process. We’ve worked closely with the International Fact Checking Network to ensure that fact-checkers operating around the world have been able to provide feedback.

You can still access the first terminology proposal and the first structured data proposal, as well as comments offered on those documents.

What is the current status of MediaReview?

MediaReview currently has pending status on Schema.org, which oversees the tagging vocabulary that publishers use. That means it is still under development.

The Duke Reporters’ Lab is testing the current version of MediaReview with several key fact-checkers in the United States: FactCheck.org, PolitiFact and The Washington Post.

You can see screenshots of our current MediaReview form, including working labels and definitions here: Claim Only, Video, Image.

We’re also sharing test MediaReview data as it’s entered by fact-checkers. You can access a spreadsheet of fact-checks tagged with MediaReview here.

How can I offer feedback?

Through our testing with fact-checkers and with an ever-expanding group of misinformation experts, we’ve identified a number of outstanding issues that we’re soliciting feedback on. Please comment on the linked Google Doc with your thoughts and suggestions.

We’re also proposing new Media Types and Ratings to address some of the outstanding issues, and we’re seeking feedback on those as well.

We want your feedback on the MediaReview tagging system

The new tagging system will allow fact-checkers to alert tech platforms about false videos and fake images.

By Bill Adair - June 9, 2020

Last fall, we launched an ambitious effort to develop a new tagging system for fact-checks of fake videos and images. The idea was to take the same approach that fact-checkers use when they check claims by politicians and political groups, a system called ClaimReview, and build something of a sequel. We called it MediaReview.

For the past nine months, Joel Luther, Erica Ryan and I have been talking with fact-checkers, representatives of the tech companies and other leaders in the battle against misinformation. Our ever-expanding group has come up with a great proposal and would love your feedback.

Like ClaimReview, MediaReview is a schema – a tagging system that web publishers can use to identify different kinds of content. By tagging their articles, the publishers are essentially telling the world, “This is a fact-check on this politician on this particular claim.” That can be a valuable signal to tech companies, which can decide if they want to add labels to the original content or demote its standing in a feed, or do nothing. It’s up to them.
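For readers who haven't seen ClaimReview markup, here is a minimal Python sketch of the kind of JSON-LD object a fact-checker embeds in an article page. The property names (claimReviewed, itemReviewed, reviewRating, alternateName) come from the published schema.org ClaimReview type; the helper function, URL, names and rating are hypothetical, and real deployments usually include more fields, such as when and where the claim appeared.

```python
import json


def claim_review(url: str, claim: str, speaker: str, rating: str,
                 publisher: str, date: str) -> dict:
    """Build a minimal ClaimReview object as a JSON-LD-ready dict (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "ClaimReview",
        "url": url,
        "datePublished": date,
        "claimReviewed": claim,
        "author": {"@type": "Organization", "name": publisher},
        "itemReviewed": {
            "@type": "Claim",
            "author": {"@type": "Person", "name": speaker},
        },
        "reviewRating": {"@type": "Rating", "alternateName": rating},
    }


review = claim_review(
    url="https://factchecker.example/2020/06/claim-check",  # hypothetical URL
    claim="Example claim made in a speech",
    speaker="Example Politician",
    rating="False",
    publisher="Example Fact-Checker",
    date="2020-06-09",
)

# Publishers typically embed the object in the article as a JSON-LD script tag.
script_tag = '<script type="application/ld+json">' + json.dumps(review) + "</script>"
print(script_tag)
```

MediaReview follows the same pattern, with the reviewed item being a video or image rather than a spoken claim.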

(Note: Google and Facebook have supported the work of The Reporters’ Lab and have given us grants to develop MediaReview.)

ClaimReview, which we developed with Google and Schema.org five years ago, has been a great success. It is used by more than half of the world’s fact-checkers and has been used to tag more than 50,000 articles. Those articles get highlighted in Google News and in search results on Google and YouTube.

We’re hopeful that MediaReview will be equally successful. By responding quickly to fake videos and bogus images, fact-checkers can provide the tech platforms with vital information about false content that might be going viral. The platforms can then decide if they want to take action.

The details are critical. We’ve based MediaReview on a taxonomy developed by the Washington Post. We’re still discussing the names of the labels, so feel free to make suggestions about the labels – or anything.

You can get a deeper understanding of MediaReview in this article in Nieman Lab.

You can see screenshots of our current MediaReview form, including working labels and definitions here: Claim Only, Video, Image.

You can see our distillation of the current issues and add your comments here.

Update: 237 fact-checkers in nearly 80 countries … and counting

So far the Reporters' Lab list is up 26% over last year's annual tally.

By Mark Stencel and - April 3, 2020

Fact-checking has expanded to 78 countries, where the Duke Reporters’ Lab counts at least 237 organizations that actively verify the statements of public figures, track political promises and combat misinformation.

So far, that’s a 26% increase in the 10 months since the Reporters’ Lab published its 2019 fact-checking census. That was on the eve of last summer’s annual Global Fact summit in South Africa, when our international database and map included 188 active fact-checkers in more than 60 countries.

We know that’s an undercount because we’re still counting. But here’s where we stand by continent:

Africa: 17

Asia: 53

Australia: 4

Europe: 68

North America: 69

South America: 26
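The continent figures above account for the full 237, and the 26% increase follows directly from the 188 counted in the 2019 census. A quick Python check:

```python
# Continent counts reported in April 2020.
april_2020 = {
    "Africa": 17,
    "Asia": 53,
    "Australia": 4,
    "Europe": 68,
    "North America": 69,
    "South America": 26,
}
census_2019_total = 188  # active fact-checkers in the June 2019 census

total = sum(april_2020.values())
increase_pct = (total - census_2019_total) / census_2019_total * 100
print(f"{total} fact-checkers, up {increase_pct:.0f}% from {census_2019_total}")
# prints: 237 fact-checkers, up 26% from 188
```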

About 20 fact-checkers listed in the database launched since last summer’s census. One of the newest launched just last week: FACTA, a spinoff of longtime Italian fact-checker Pagella Politica that will focus broadly on online hoaxes and disinformation.

The Lab’s next annual census will be published this summer, when the International Fact Checking Network hosts an online version of Global Fact. On Wednesday, the network postponed the in-person summit in Norway, scheduled for June, because of the coronavirus pandemic.

Several factors are driving the growth of fact-checking.

One is the increasing spread of misinformation on large digital media platforms, some of which are turning to fact-checkers for help — directly and indirectly. That includes a Facebook partnership that enlists participating “third-party” fact-checkers to help respond to some categories of misleading information flagged by its users. Another example is ClaimReview, an open-source tagging system the Reporters’ Lab helped develop that makes it easier for Google and other platforms to spotlight relevant fact-checks and contradict falsehoods. The Reporters’ Lab is developing a related new tagging system, MediaReview, that will help flag manufactured and misleading use of images, including video and photos. (Disclosure: Facebook and Google are among the funders of the Lab, which develops and deploys technology to help fact-checkers. The Lab collaborated with Schema.org and Google to establish the ClaimReview framework and encourage its adoption.)

Another factor in the growth of fact-checking is the increasing role of collaboration. That includes fact-checking partnerships that involve competing news outlets and media groups that have banded together to share fact-checks or jointly cover political claims, especially during elections. It also includes growing collaboration within large media companies. Examples of those internal partnerships range from Agence France-Presse, the French news service that has established regional fact-checking sites with dedicated reporters in dozens of its bureaus around the world, to U.S.-based TEGNA, whose local TV stations produce and share “Verify” fact-checking segments across more than four dozen outlets.

Sharing content and processes is a positive thing — though it means it’s more difficult for our Lab to keep count. These multi-outlet fact-checking collaborations make it complicated for us to determine who exactly produces what, or to keep track of the individual outlets where readers, viewers and listeners can find this work. We’ll be clarifying our selection process to address that.

We’ll have more to say about the trends and trajectory of fact-checking in our annual census when the Global Fact summit convenes online. Working with a student researcher, Reporters’ Lab director Bill Adair first began tallying fact-checking projects for the first Global Fact summit in 2014. That gathering of about 50 people in London ultimately led a year later to the formation of the International Fact Checking Network, which is based at the Poynter Institute, a media studies and training center in St. Petersburg, Florida.

The IFCN summit itself has become a measure of fact-checking’s growth. Before IFCN decided to turn this year’s in-person conference into an online event, more than 400 people had confirmed their participation. That would have been about eight times larger than the original London meeting in 2014.

IFCN director Baybars Örsek told fact-checkers Wednesday that the virtual summit will be scheduled in the coming weeks. Watch for our annual fact-checking census then.

Squash report card: Improvements during State of the Union … and how humans will make our AI smarter

We've had some encouraging improvements in the AI powering our experimental fact-checking technology. But to make Squash smarter, we're calling in a human.

By Bill Adair - February 23, 2020

Squash, the experimental pop-up fact-checking product of the Reporters’ Lab, is getting better.

Our live test during the State of the Union address on Feb. 4 showed significant improvement over our inaugural test last year. Squash popped up 14 relevant fact-checks on the screen, up from just six last year.

That improvement matches a general trend we’ve seen in our testing. We’ve had a higher rate of relevant matches when we use Squash on videos of debates and speeches.

But we still have a long way to go. This month’s State of the Union speech also had 20 non-relevant matches, which means Squash displayed fact-checks that weren’t related to what the president said. If you’d been watching at that moment, you probably would have thought, “What is Squash thinking?”

We’re now going to try two ways to make Squash smarter: a new subject tagging system that will be based on a wonderfully addictive game developed by our lead technologist Chris Guess; and a new interface that will bring humans into the live decision-making. Squash will recommend fact-checks to display, but an editor will make the final judgment.

Some background in case you’re new to our project: Squash, part of the Lab’s Tech & Check Cooperative, is a revolutionary new product that displays fact-checks on a video screen during a debate or political speech. Squash “hears” what politicians say, converts their speech to text and then searches a database of previously published fact-checks for one that’s related. When Squash finds one, it displays a summary on the screen.

For our latest tests, we’ve been using Elasticsearch, a tool for building search engines that we’ve made smarter with two filters: ClaimBuster, an algorithm that identifies factual claims, and a large set of common synonyms. ClaimBuster helps Squash avoid wasting time and effort on sentences that aren’t factual claims, and the synonyms help it make better matches.
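The Lab hasn’t published Squash’s matching code here, but the filter-then-match idea in the paragraph above can be sketched in a few lines of Python. This is a hypothetical illustration only: `looks_like_claim` is a toy keyword heuristic standing in for ClaimBuster (which scores sentences with a trained model), the synonym table and sample fact-checks are invented, and a plain count of shared words stands in for Elasticsearch’s relevance scoring.

```python
import re

# Toy stopword list so common words don't count as matches.
STOPWORDS = {"a", "an", "the", "and", "of", "to", "in", "on", "for",
             "that", "we", "have", "is", "are", "was", "were"}

# Hypothetical stand-in for ClaimBuster: the real tool uses a trained model;
# this heuristic just looks for numbers or comparison words.
CHECKABLE_HINTS = {"more", "less", "percent", "million", "billion",
                   "highest", "lowest", "most", "fewer"}

# Hypothetical synonym groups; each word maps to the full group it belongs to.
SYNONYMS = {
    "jobs": {"jobs", "employment"},
    "employment": {"jobs", "employment"},
    "wall": {"wall", "barrier"},
    "barrier": {"wall", "barrier"},
}


def tokenize(text):
    """Lowercase the text and return its non-stopword words."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def looks_like_claim(sentence):
    """Return True if the sentence seems worth checking (toy heuristic)."""
    return any(t.isdigit() or t in CHECKABLE_HINTS for t in tokenize(sentence))


def expand(tokens):
    """Add synonyms so 'jobs' and 'employment' can match each other."""
    expanded = set()
    for t in tokens:
        expanded |= SYNONYMS.get(t, {t})
    return expanded


def best_match(sentence, fact_checks, min_overlap=2):
    """Return the fact-check summary sharing the most words with the sentence,
    or None if the sentence isn't a claim or nothing overlaps enough."""
    if not looks_like_claim(sentence):
        return None  # skip small talk before searching at all
    query = expand(tokenize(sentence))
    best, best_score = None, 0
    for summary in fact_checks:
        score = len(query & expand(tokenize(summary)))
        if score > best_score:
            best, best_score = summary, score
    return best if best_score >= min_overlap else None


FACT_CHECKS = [
    "Claim that 7 million new jobs were created",
    "Claim about how long a road permit takes",
]
print(best_match("We have added 7 million new employment opportunities", FACT_CHECKS))
# prints: Claim that 7 million new jobs were created
print(best_match("Thank you all for being here tonight", FACT_CHECKS))
# prints: None
```

The synonym step is what lets “employment” in the speech line up with “jobs” in the fact-check; without it the overlap would drop from five words to four, and with fewer shared words a real match could fall below the threshold.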

Guess, assisted by project manager Erica Ryan and student developers Jack Proudfoot and Sanha Lim, will soon be testing a new way of matching that uses natural language processing based on the subject of the fact-check. We believe that we’ll get more relevant matches if the matching is based on subjects rather than just the words in the politicians’ claims.

But to make that possible, we have to put subject tags on thousands of fact-checks in our ClaimReview database. So Guess has created a game called Caucus that displays a fact-check on your phone and then asks you to assign subject tags to it. The game is oddly addictive. Every time you submit one, you want to do another…and another. Guess has a leaderboard so we can keep track of who is tagging the most fact-checks. We’re testing the game with our students and staff, but hope to make it public soon.
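Subject tags like the ones Caucus players assign are what would make subject-based matching possible. The Python sketch below is a hypothetical illustration, not the Lab’s natural language processing approach: a tiny invented keyword lexicon guesses the subjects of a spoken sentence, and tagged fact-checks are ranked by Jaccard overlap between those guessed subjects and their human-assigned tags. The lexicon, tags, summaries and function names are all made up for illustration.

```python
import re

# Hypothetical keyword lexicon standing in for an NLP subject classifier.
SUBJECT_KEYWORDS = {
    "economy": {"jobs", "unemployment", "wages", "economy"},
    "immigration": {"border", "wall", "immigrants", "asylum"},
    "space": {"moon", "nasa", "astronauts", "mars"},
    "infrastructure": {"road", "roads", "bridge", "permit"},
}


def subjects_for(sentence):
    """Guess which subjects a spoken sentence is about."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    return {subject for subject, keys in SUBJECT_KEYWORDS.items() if words & keys}


def jaccard(a, b):
    """Share of subjects two sets have in common, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_by_subject(sentence, tagged_fact_checks):
    """tagged_fact_checks is a list of (summary, subject_tags) pairs whose tags
    came from human taggers. Returns the best-matching summary or None."""
    claim_subjects = subjects_for(sentence)
    if not claim_subjects:
        return None  # no recognizable subject, so show nothing
    scored = [(jaccard(claim_subjects, tags), summary)
              for summary, tags in tagged_fact_checks]
    score, summary = max(scored, key=lambda pair: pair[0], default=(0.0, None))
    return summary if score > 0 else None


TAGGED = [
    ("How long a road permit takes", {"infrastructure"}),
    ("Cost of returning astronauts to the moon", {"space", "economy"}),
]
print(match_by_subject("This nation put men on the moon", TAGGED))
# prints: Cost of returning astronauts to the moon
```

Because the comparison happens between subjects rather than raw words, a line about the moon can only land on a fact-check tagged with a space-related subject, never on one tagged only with infrastructure.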

We’ve also decided that Squash needs a little human help. Guess, working with our student developer Matt O’Boyle, is building an interface for human editors to control which matches actually pop up on users’ screens.

The new interface would let them review the fact-check that Squash recommends and decide whether to let it pop up on the screen, which should help us filter out most of the unrelated matches.

That should eliminate the slightly embarrassing problem of Squash making a comically bad match. (My favorite: one from last year’s State of the Union when Squash matched the president’s line about men walking on the moon with a fact-check on how long it takes to get a permit to build a road.)

Assuming the new interface works relatively well, we’ll try to do a public demo of Squash this summer.

Slowly but steadily, we are making progress. Watch for more improvements soon.

Reporters’ Lab developing MediaReview, a new tool to combat fake videos and images

Standardizing how fact-checkers tag false videos and images should help search engines and social media companies identify misinformation more quickly.

By Catherine Clabby - January 27, 2020

Misleading, maliciously edited and other fake videos are on the rise around the world.

To help, the Duke Reporters’ Lab is leading a drive to create MediaReview, a new tagging system that will enable fact-checkers to be more consistent when they debunk false videos and images. It should help search engines and social media companies identify fakes more quickly and take prompt action to slow or stop them.

MediaReview is a schema similar to ClaimReview, a tagging system developed by the Reporters’ Lab, Google and Jigsaw that enables fact-checkers to better identify their articles for search engines and social media. MediaReview is based on a video-labeling vocabulary that Washington Post journalists created to describe misleading videos.

The new tagging system will allow fact-checkers to quickly label fake and manipulated videos and images with standardized tags such as “missing context,” “transformed” and “edited.”

Bill Adair and Joel Luther are leading this project at the Reporters’ Lab. You can read about their work in a recent Nieman Lab article and in their writing describing the project and why it’s needed:

MediaReview: Translating the video and visual fact-check terminology to Schema.org structured data

MediaReview case study: Gosar and Biden

Here’s more detail on the Washington Post’s pioneering system for labeling misinformation-bearing videos:

Introducing The Fact Checker’s guide to manipulated video

Last thing. If you don’t know much about the importance of ClaimReview, this should catch you up:

Lab launches global effort to expand ClaimReview.

U.S. fact-checkers gear up for 2020 campaign

Of the 226 fact-checking projects in the latest Reporters’ Lab global count, 50 are in the U.S. -- and most are locally focused.

By Mark Stencel and - November 25, 2019

With the U.S. election now less than a year away, at least four dozen American fact-checking projects plan to keep tabs on claims by candidates and their supporters, and a majority of those fact-checkers won’t be focused on the presidential campaign.

The 50 active U.S. fact-checking projects are included in the latest Reporters’ Lab tally of global fact-checking, which now shows 226 sites in 73 countries. More details about the global growth below.

Of the 50 U.S. projects, about a third (16) are nationally focused. That includes independent fact-checkers such as FactCheck.org, PolitiFact and Snopes, as well as major news media efforts, including the Associated Press, The Washington Post, CNN and The New York Times. There also are a handful of fact-checkers that are less politically focused. They concentrate on global misinformation or specific topic areas, from science to gossip.

At least 31 others are state and locally minded fact-checkers spread across 20 states. Of that 31, 11 are PolitiFact’s state-level media partners. A new addition to that group is WRAL-TV in North Carolina — a commercial TV station that took over the PolitiFact franchise in its state from The News & Observer, a McClatchy-owned newspaper based in Raleigh. Beyond North Carolina, PolitiFact has active local affiliates in California, Florida, Illinois, Missouri, New York, Texas, Vermont, Virginia, West Virginia and Wisconsin.

The News & Observer has not abandoned fact-checking. It launched a new statewide initiative of its own — this time without PolitiFact’s trademarked Truth-O-Meter or a similar rating system for the statements it checks. “We’ll provide a highly informed assessment about the relative truth of the claims, rather than a static rating or ranking,” The N&O’s editors said in an article announcing its new project.

Among the 20 U.S. state and local fact-checkers that are not PolitiFact partners, at least 13 use some kind of rating system.

Of all the state and local fact-checkers, 11 are affiliated with TV stations — like WRAL, which had its own fact-checking service before it joined forces with PolitiFact this month. Another 11 are affiliated with newspapers or magazines. Five are local digital media startups and two are public radio stations. There are also a handful of projects based in academic journalism programs.

One example of a local digital startup is Mississippi Today, a non-profit state news service that launched a fact-checking page for last year’s election. It is among the projects we have added to our database over the past month.

We should note that some of these fact-checkers hibernate between election cycles. Seasonal fact-checkers with long track records over multiple election cycles remain active in our database. Some have done this kind of reporting for years. For instance, WISC-TV in Madison, Wisconsin, has been fact-checking since 2004, three years before PolitiFact, The Washington Post and AP got into the business.

One of the hardest fact-checking efforts for us to quantify is run by corporate media giant TEGNA Inc., which operates nearly 50 stations across the country. Its “Verify” segments began as a pilot project at WFAA-TV in the Dallas area in 2016. Now each station produces its own versions for its local TV and online audience. The topics are usually suggested by viewers, with local reporters often fact-checking political statements or debunking local hoaxes and rumors.

A reporter at WCNC-TV in Charlotte, North Carolina, also produces national segments that are distributed for use by any of the company’s other stations. We’ve added TEGNA’s “Verify” to our database as a single entry, but we may also add individual stations as we determine which ones do the kind of fact-checking we are trying to count. (Here’s how we decide which fact-checkers to include.)

A Global Movement

As for the global picture, the Reporters’ Lab is now up to 226 active fact-checking projects around the world, up from 210 in October, when our count went over 200 for the first time. That is more than five times the number we first counted in 2014. It’s also more than double a retroactive count for that same year, a number based on the actual start dates of all the fact-checking projects we’ve added to the database over the past five years (see footnote to our most recent annual census for details).

The growth of Agence France-Presse’s work as part of Facebook’s third-party fact-checking partnership is a big factor. After adding a slew of AFP bureaus with dedicated fact-checkers to our database last month, we added many more, including bureaus in Argentina, Brazil, Colombia, Mexico, Poland, Lebanon, Singapore, Spain, Thailand and Uruguay. We now count 22 individual AFP bureaus, all started since 2018.

Other recent additions to the database involved several established fact-checkers, including PesaCheck, which launched in Kenya in 2016. Since then it’s added bureaus in Tanzania in 2017 and Uganda in 2018 — both of which are now in our database. We added Da Begad, a volunteer effort based in Egypt that has focused on social media hoaxes and misinformation since 2013. And there’s a relative newcomer too: Re:Check, a Latvian project that’s affiliated with a non-profit investigative center called Re:Baltica. It launched over the summer.

Peru’s OjoBiónico is back on our active list. It resumed fact-checking last year after a two-year hiatus. OjoBiónico is a section of OjoPúblico, a digital news service that focuses on investigative reporting.

We already have other fact-checkers we plan to add to our database over the coming weeks. If there’s a fact-checker you know about that we need to update or add to our map, please contact Joel Luther at the Reporters’ Lab.